Trade-offs: Accuracy and interpretability, bias and variance

Dr. D’Agostino McGowan

Study Sessions

- Monday 7-9p

- Manchester 122

Lab 01

- Knit, Commit, Push often

- Commit and Push all files

- Check on GitHub.com to make sure everything is updating

- You won't see a rendered file

📖 Canvas

- use Google Chrome

Regression and Classification

- Regression: quantitative response

- Classification: qualitative (categorical) response

What would be an example of a regression problem?

What would be an example of a classification problem?

Regression

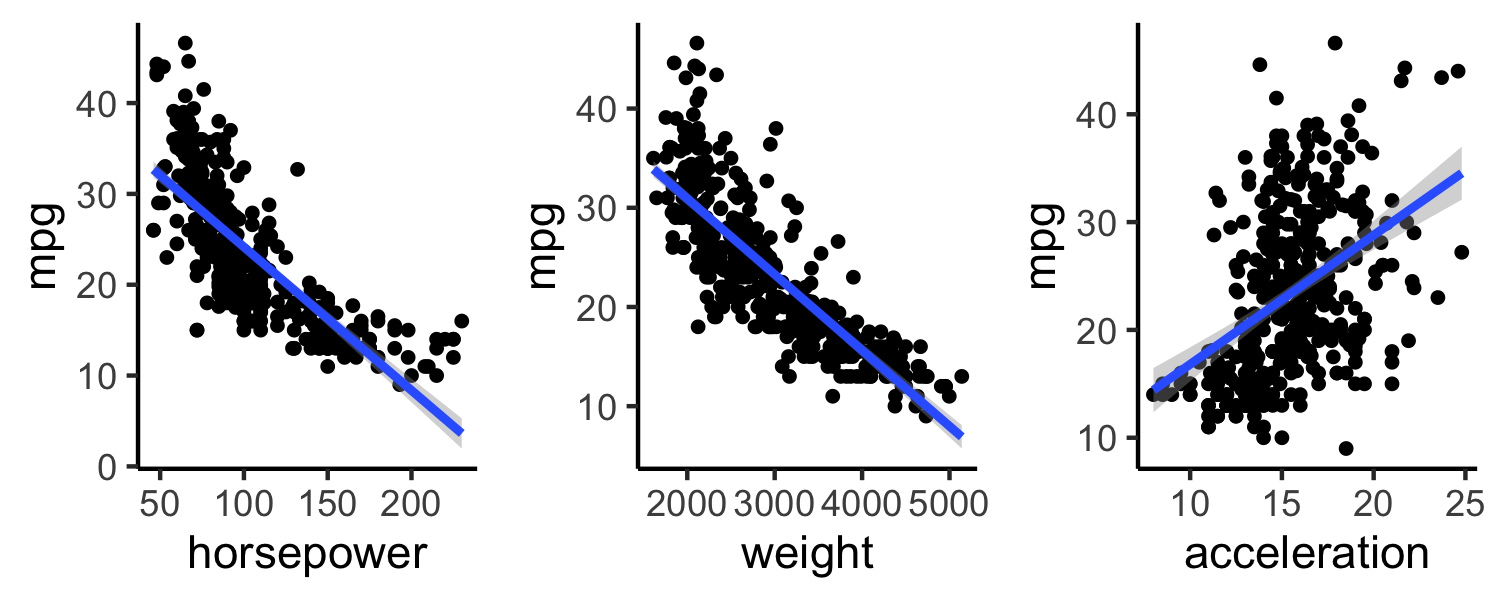

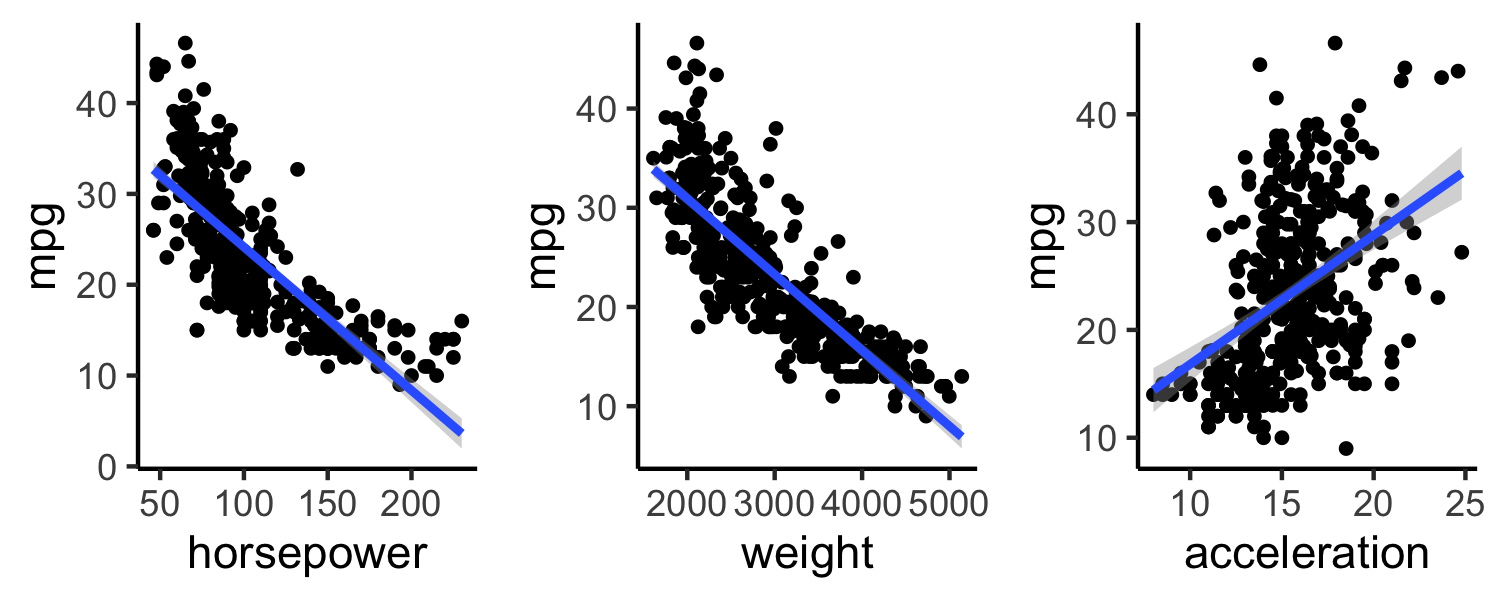

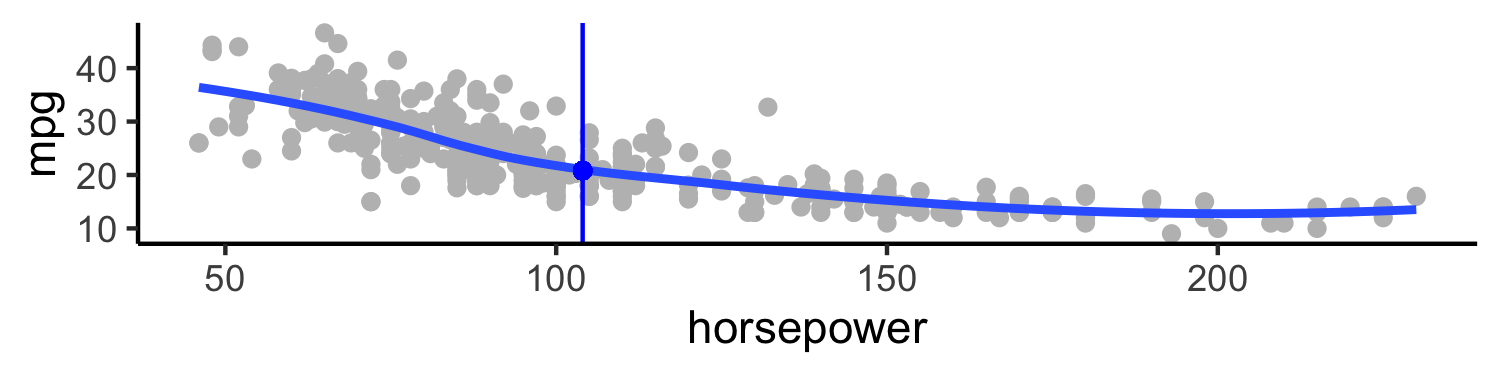

Auto data

Above are mpg vs horsepower, weight, and acceleration, with a blue linear-regression line fit separately to each. Can we predict mpg using these three?

Maybe we can do better using a model:

$$\text{mpg} \approx f(\text{horsepower}, \text{weight}, \text{acceleration})$$

Notation

- mpg is the response variable, or outcome variable; we refer to it as $Y$

- horsepower is a feature, input, or predictor; we refer to it as $X_1$

- weight is $X_2$

- acceleration is $X_3$

Our input vector is

$$X = \begin{bmatrix} X_1 \\ X_2 \\ X_3 \end{bmatrix}$$

- Our model is

$$Y = f(X) + \epsilon$$

- $\epsilon$ is our error

Why do we care about f(X)?

- We can use $f(X)$ to make predictions of Y for new values of $X = x$

- We can gain a better understanding of which components of $X = (X_1, X_2, \dots, X_p)$ are important for explaining Y

- Depending on how complex f is, maybe we can understand how each component ($X_j$) of X affects Y

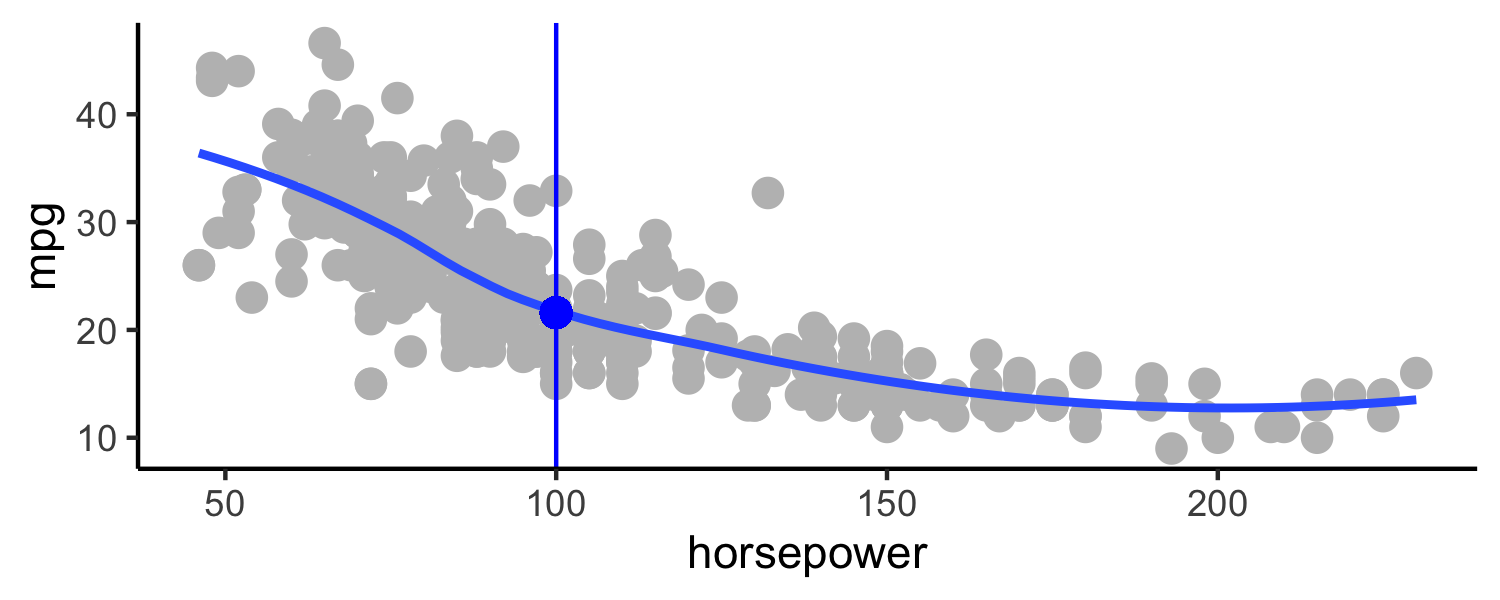

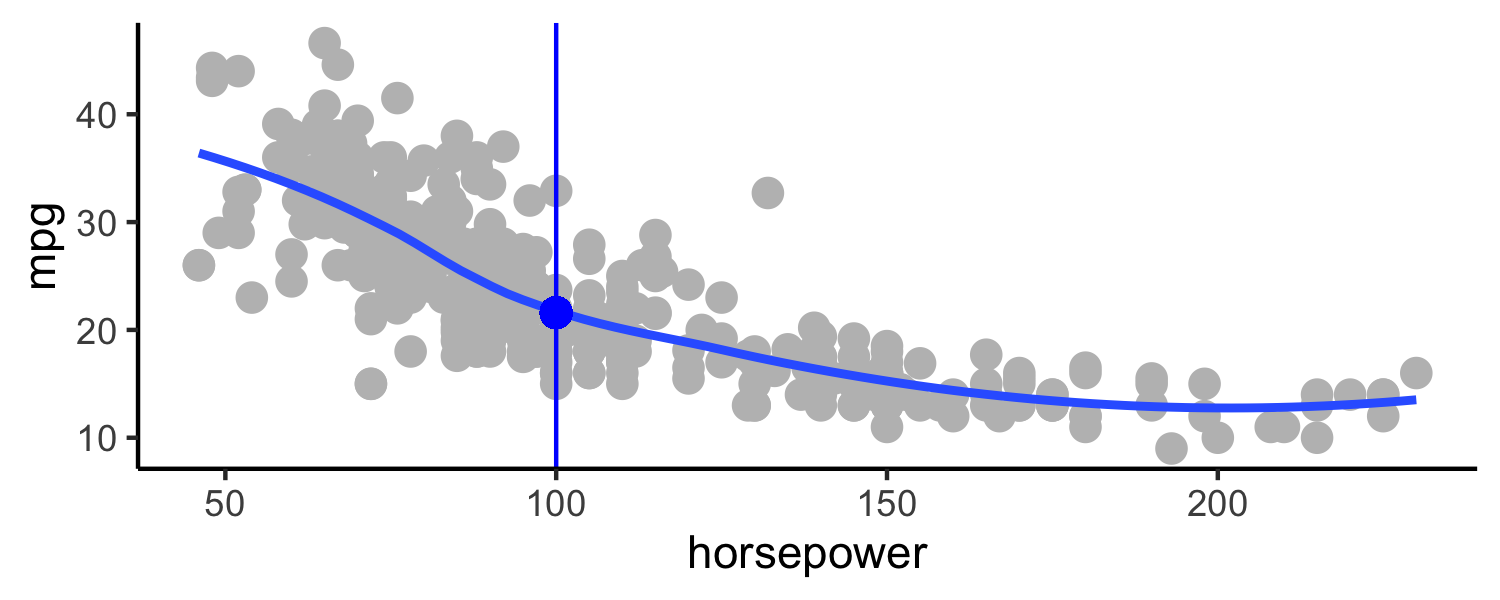

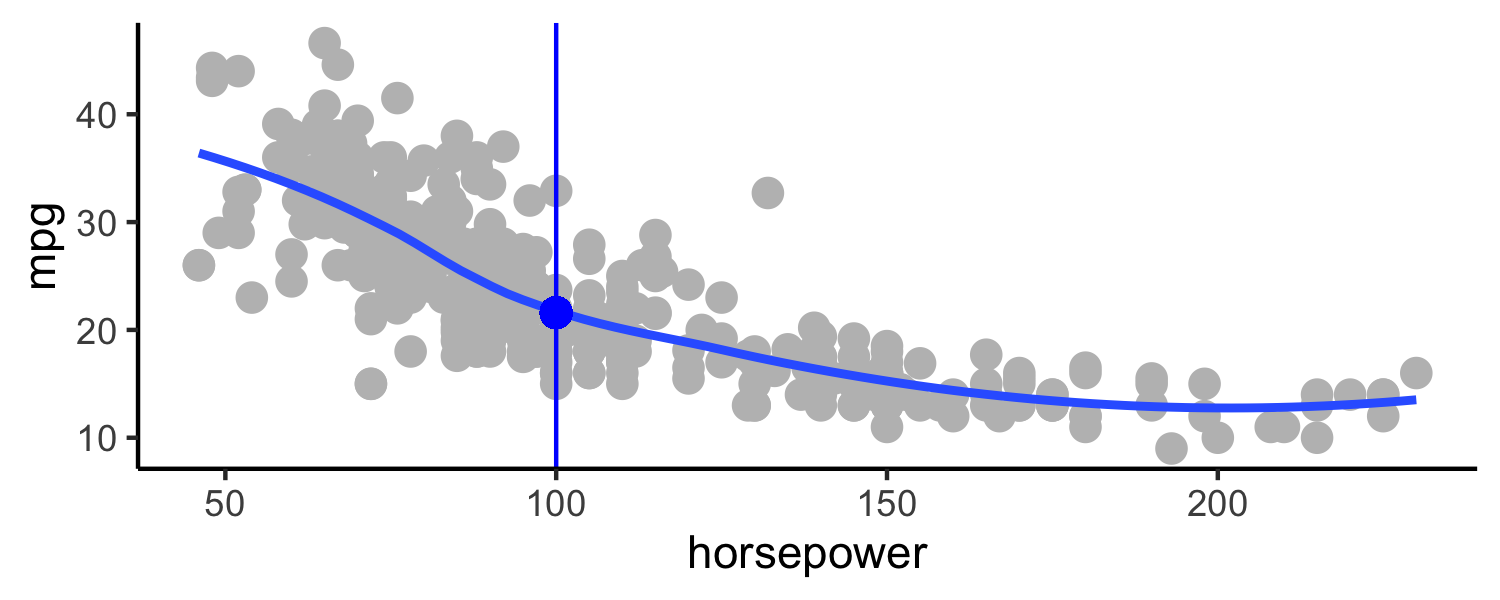

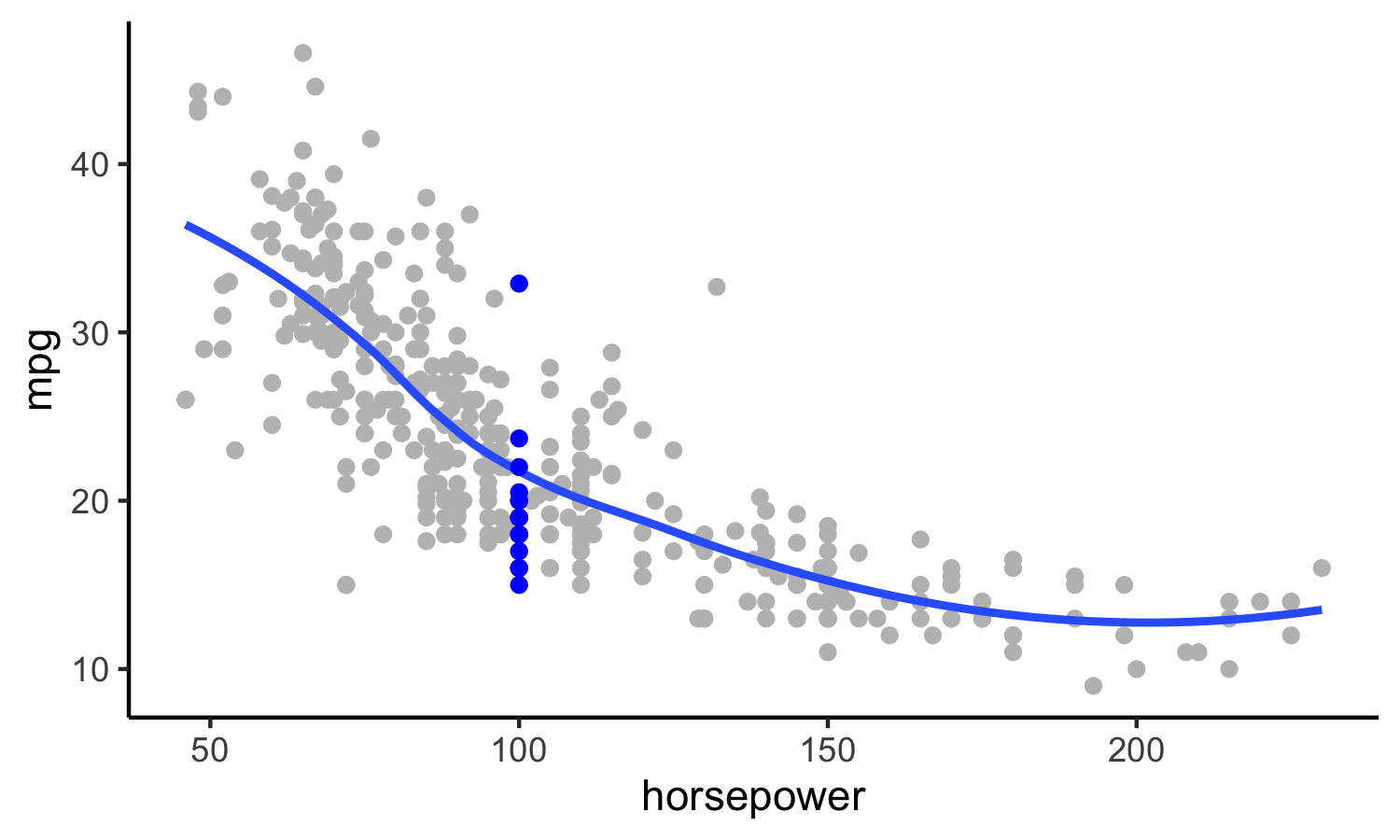

How do we choose f(X)?

What is a good value for $f(X)$ at any selected value of X, say $X = 100$? There can be many Y values at $X = 100$.

A good value is

$$f(100) = E(Y \mid X = 100)$$

$E(Y \mid X = 100)$ means the expected value (average) of Y given $X = 100$.

This ideal $f(x) = E(Y \mid X = x)$ is called the regression function

Regression function, f(X)

- Also works for a vector, X, for example,

$$f(x) = f(x_1, x_2, x_3) = E[Y \mid X_1 = x_1, X_2 = x_2, X_3 = x_3]$$

- This is the optimal predictor of Y in terms of mean-squared prediction error

$f(x) = E(Y \mid X = x)$ is the function that minimizes $E[(Y - g(X))^2 \mid X = x]$ over all functions g at all points $X = x$

- $\epsilon = Y - f(x)$ is the irreducible error

- Even if we knew $f(x)$, we would still make errors in prediction, since at each $X = x$ there is typically a distribution of possible Y values
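The optimality claim can be checked numerically. This sketch, in Python with only the standard library, simulates many Y values at a single point X = x under a hypothetical Normal(20, 4²) distribution and compares the average squared error of several constant predictions g(x). The conditional mean should come out lowest, and that minimum should sit near Var(ϵ) = 16, the irreducible error. The distribution, seed, and helper name are assumptions for illustration.

```python
import random
import statistics

# Hypothetical setup: at one fixed point X = x, Y ~ Normal(mean=20, sd=4).
random.seed(1)
ys = [random.gauss(20, 4) for _ in range(10_000)]

def mean_squared_error(ys, c):
    """Empirical version of E[(Y - c)^2 | X = x] for a constant prediction c."""
    return statistics.fmean((y - c) ** 2 for y in ys)

cond_mean = statistics.fmean(ys)  # empirical E[Y | X = x]
candidates = [cond_mean - 2, cond_mean - 0.5, cond_mean, cond_mean + 0.5, cond_mean + 2]
for c in candidates:
    print(f"g(x) = {c:6.2f}  ->  MSE = {mean_squared_error(ys, c):.3f}")
```

Moving the prediction away from the average by d adds exactly d² to the empirical MSE, which is why the average wins.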

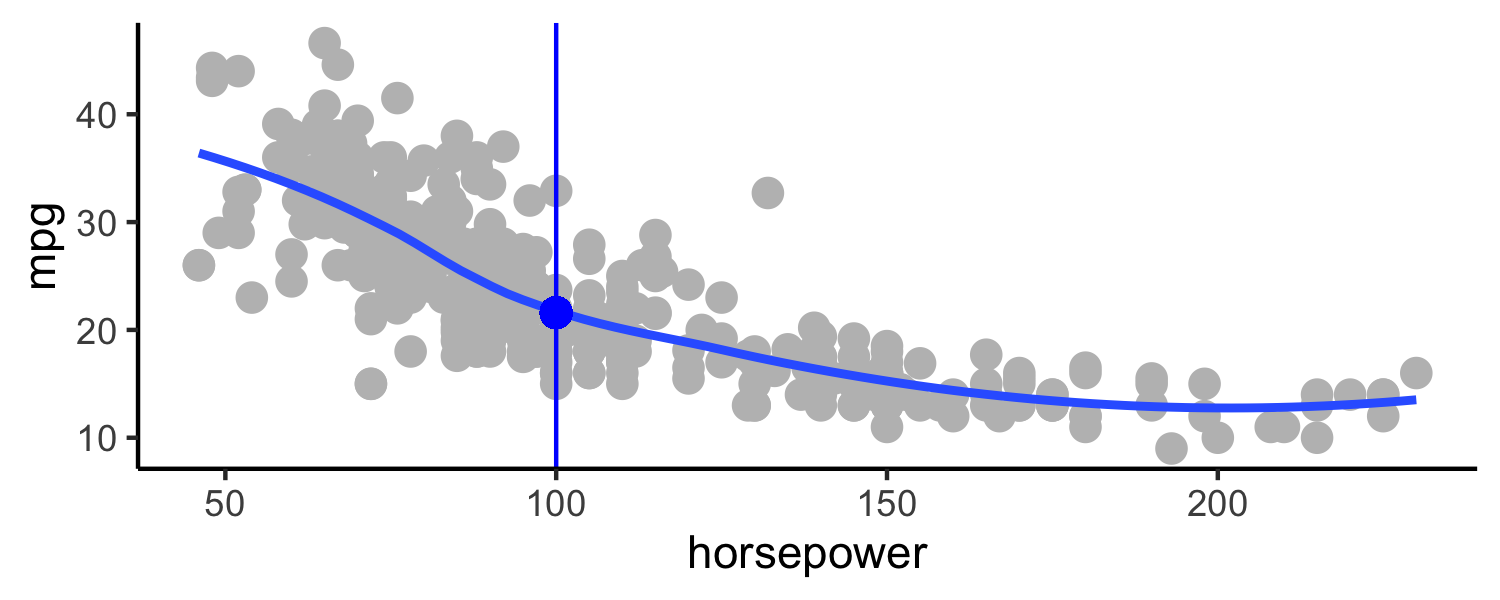

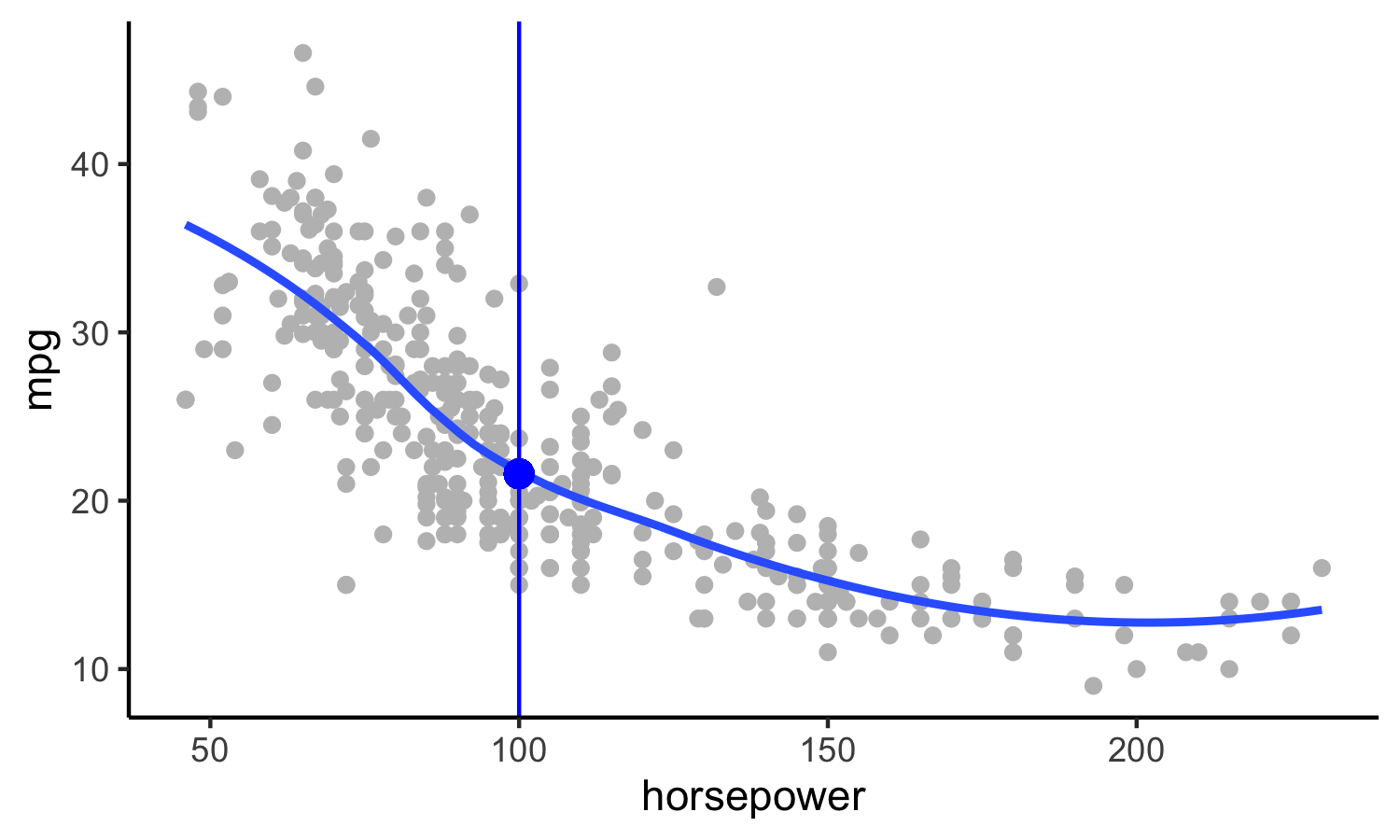

Using these points, how would I calculate the regression function?

- Take the average! $f(100) = E[\text{mpg} \mid \text{horsepower} = 100] = 19.6$
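Taking the average is a one-liner. A minimal sketch, assuming a small hypothetical list of (horsepower, mpg) pairs; these are made-up numbers, not the Auto data, so the result is not 19.6:

```python
import statistics

# Hypothetical (horsepower, mpg) pairs, NOT the real Auto data.
cars = [
    (95, 24.0), (100, 18.0), (100, 21.5), (100, 19.0),
    (105, 22.0), (100, 20.0), (110, 17.5),
]

def regression_function_at(data, x):
    """Estimate f(x) = E[Y | X = x] by averaging the y values observed at exactly x."""
    ys = [y for xi, y in data if xi == x]
    if not ys:
        raise ValueError(f"no observations at x = {x}")
    return statistics.fmean(ys)

print(regression_function_at(cars, 100))
```

Asking for horsepower 104 in this toy data raises the error, since no car has exactly that value; that gap is what neighborhood averaging addresses.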

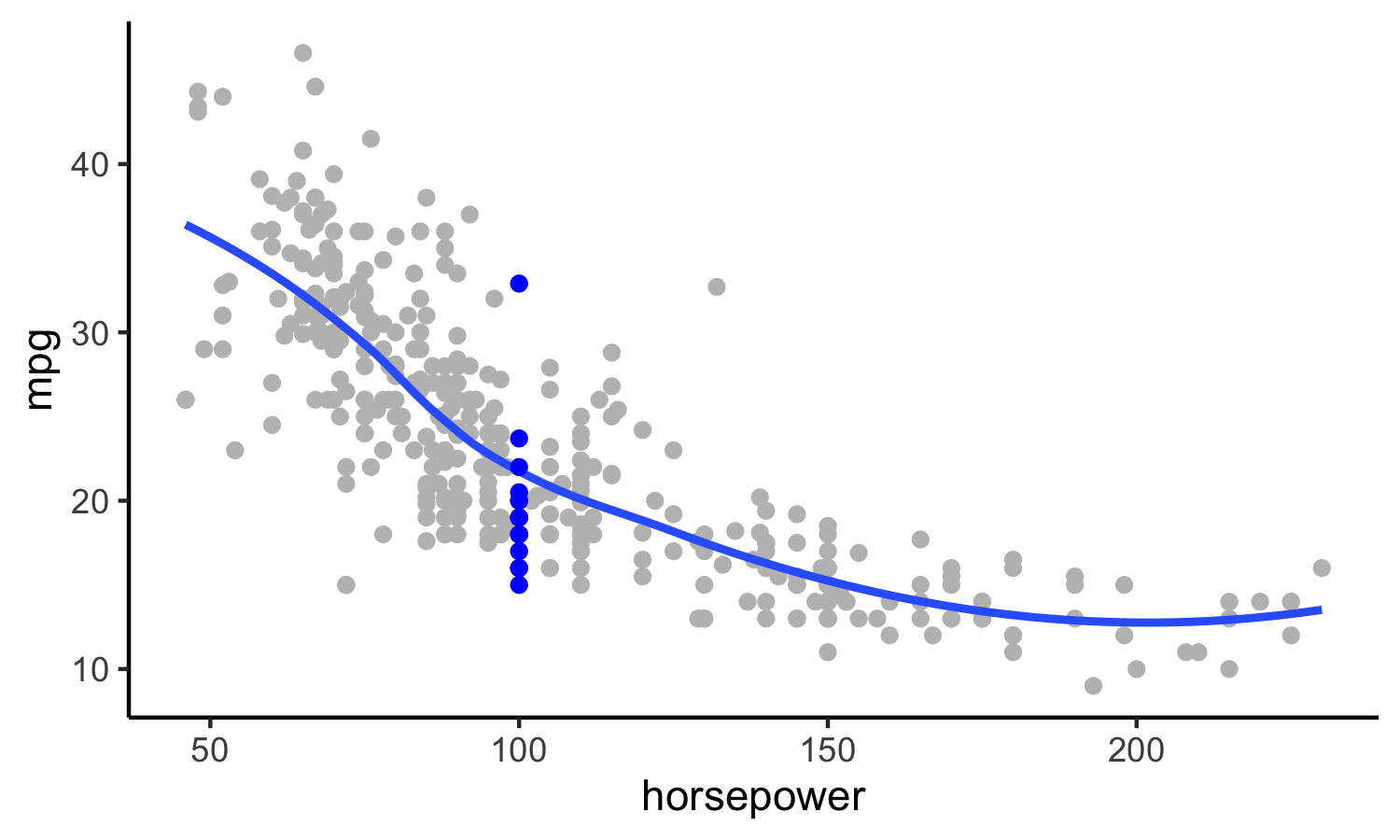

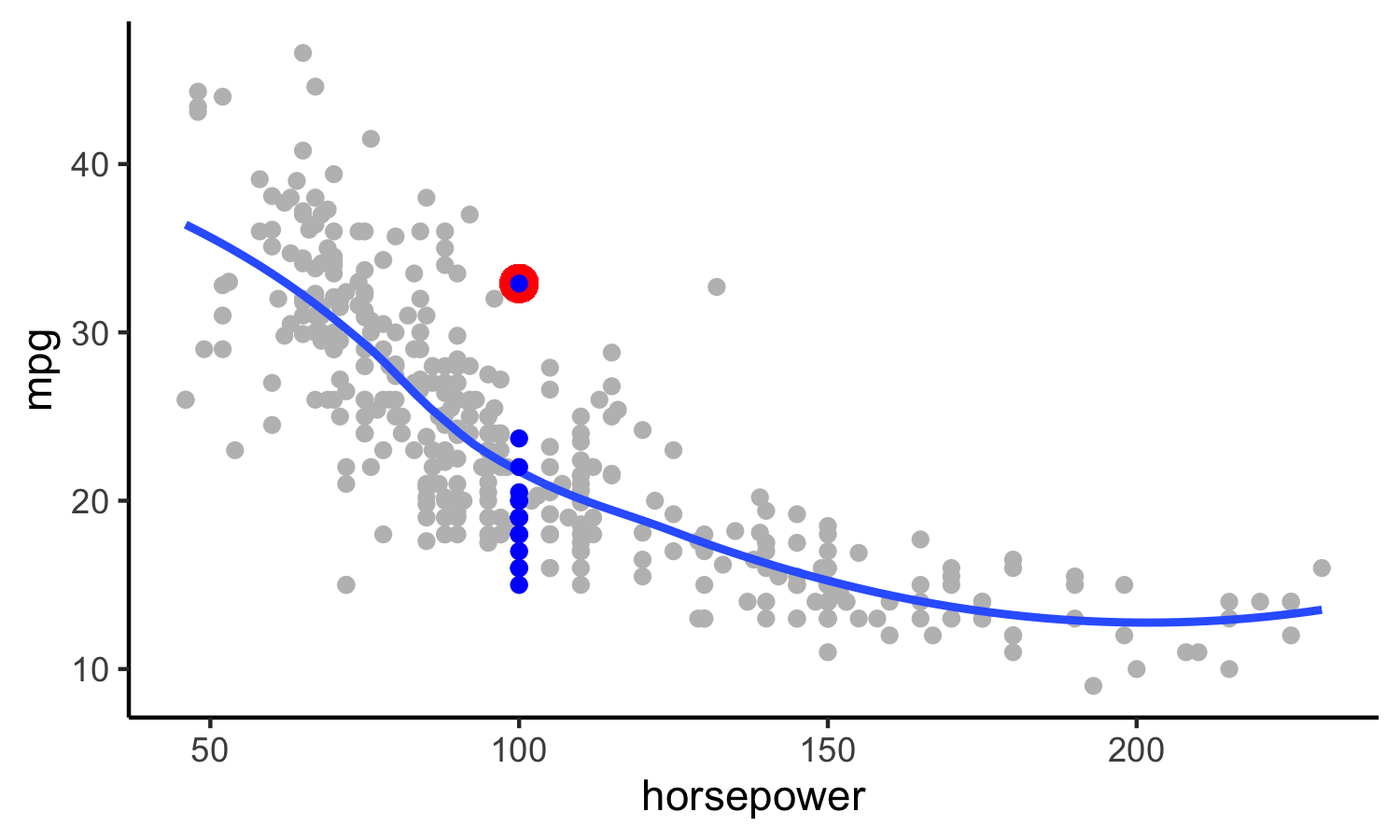

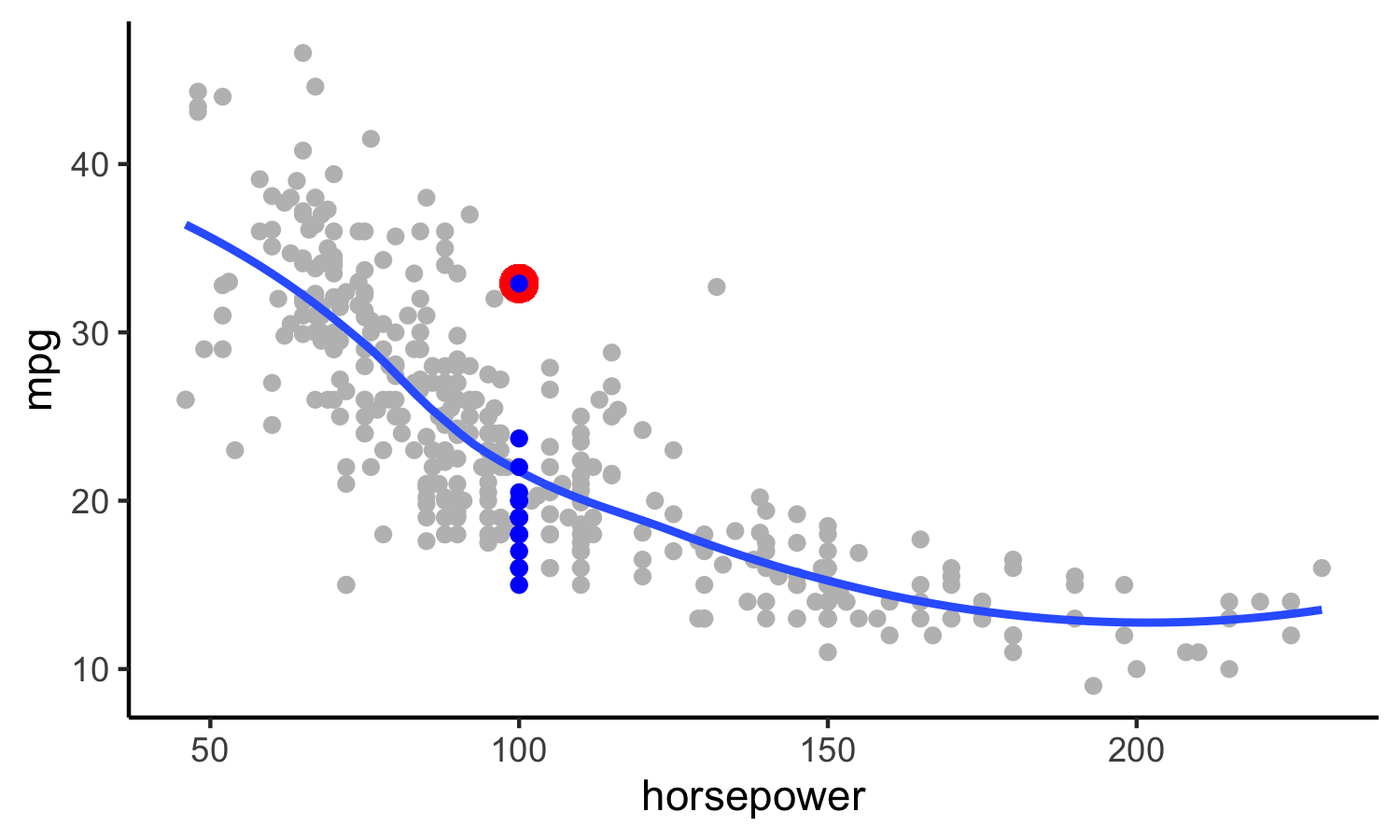

This point has a Y value of 32.9. What is ϵ?

- $\epsilon = Y - f(X) = 32.9 - 19.6 = 13.3$

The error

For any estimate, $\hat{f}(x)$, of $f(x)$, we have

$$E\left[(Y - \hat{f}(x))^2 \mid X = x\right] = \underbrace{[f(x) - \hat{f}(x)]^2}_{\text{reducible error}} + \underbrace{\text{Var}(\epsilon)}_{\text{irreducible error}}$$

- Assume for a moment that both $\hat{f}$ and X are fixed.

- $E(Y - \hat{Y})^2$, where $\hat{Y} = \hat{f}(X)$, represents the average, or expected value, of the squared difference between the predicted and actual value of Y, and $\text{Var}(\epsilon)$ represents the variance associated with the error term

- The focus of this class is on techniques for estimating f with the aim of minimizing the reducible error.

- The irreducible error will always provide an upper bound on the accuracy of our prediction for Y

- This bound is almost always unknown in practice
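The decomposition can be checked by simulation. This sketch fixes a single x and assumes a hypothetical true value f(x) = 10, an estimate f̂(x) = 12, and ϵ ~ Normal(0, 3²). The simulated E[(Y - f̂(x))² | X = x] should land close to the reducible part, (10 - 12)² = 4, plus the irreducible part, Var(ϵ) = 9.

```python
import random
import statistics

# Hypothetical setup at one fixed x: true f(x) = 10, our estimate f_hat(x) = 12,
# and epsilon ~ Normal(0, sd=3), so Var(epsilon) = 9.
random.seed(2)
f_x, f_hat_x, sigma = 10.0, 12.0, 3.0

ys = [f_x + random.gauss(0, sigma) for _ in range(100_000)]  # Y = f(x) + epsilon
expected_sq_error = statistics.fmean((y - f_hat_x) ** 2 for y in ys)

reducible = (f_x - f_hat_x) ** 2  # [f(x) - f_hat(x)]^2
irreducible = sigma ** 2           # Var(epsilon)
print(expected_sq_error, reducible + irreducible)  # simulation vs. formula; should agree closely
```

Setting `f_hat_x = f_x` removes the reducible part, but the simulated error still hovers around 9: no estimate of f can beat Var(ϵ).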

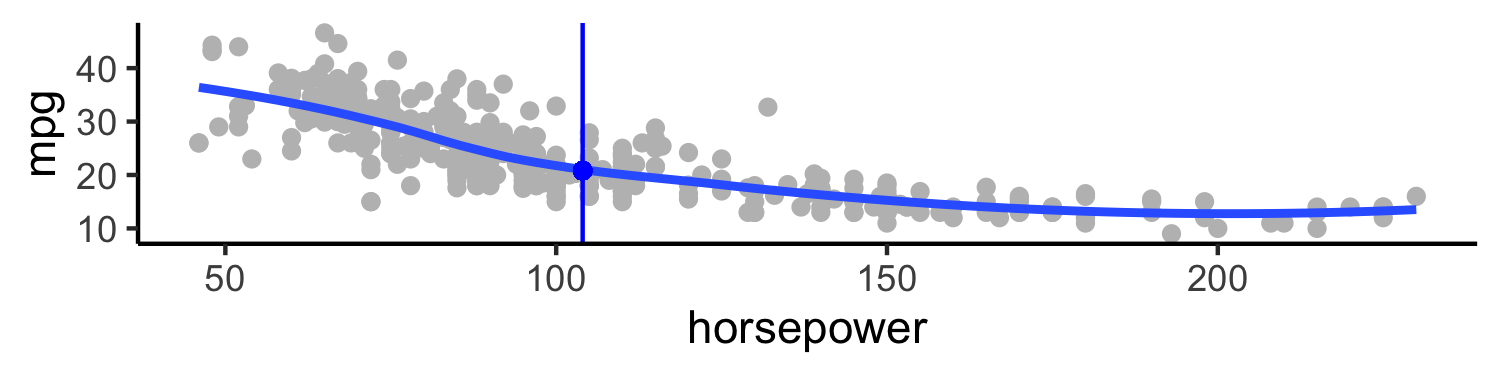

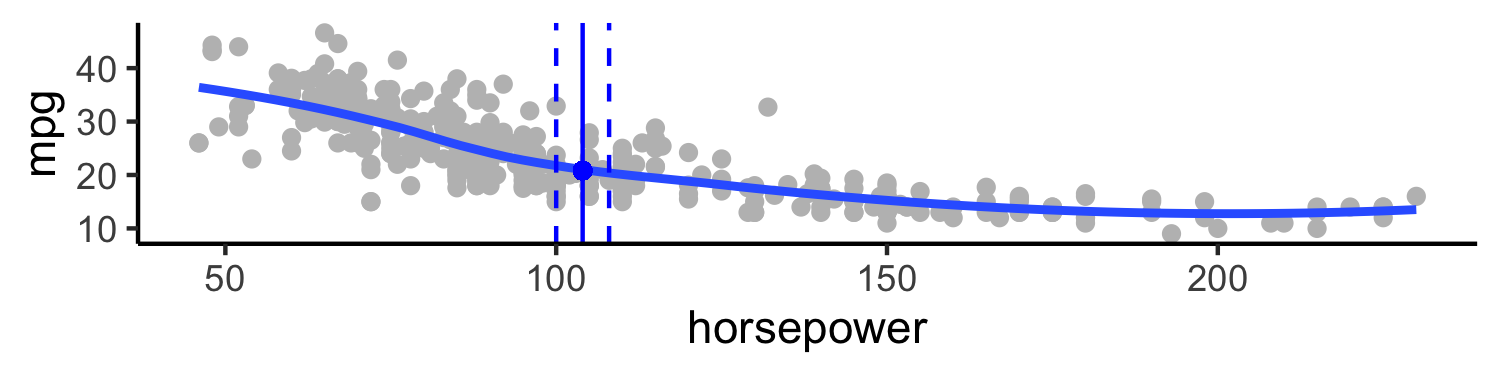

Estimating f

- Typically we have very few (if any!) data points at exactly $X = x$, so we cannot compute $E[Y \mid X = x]$

- For example, what if we were interested in estimating miles per gallon when horsepower was 104?

💡 We can relax the definition and let

$$\hat{f}(x) = E[Y \mid X \in N(x)]$$

- Where $N(x)$ is some neighborhood of x
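A minimal sketch of neighborhood averaging, assuming N(x) is the fixed-width window [x - r, x + r] (the slide leaves the choice of neighborhood open; the K nearest points is another common choice) and hypothetical (horsepower, mpg) data that contain no car with horsepower exactly 104:

```python
import statistics

# Hypothetical (horsepower, mpg) pairs; no car has horsepower exactly 104.
cars = [
    (90, 26.0), (95, 24.0), (100, 18.0), (100, 21.5), (102, 20.5),
    (105, 22.0), (107, 19.5), (110, 17.5), (120, 16.0),
]

def neighborhood_average(data, x, radius):
    """f_hat(x): average y over points whose x-value lies in N(x) = [x - radius, x + radius]."""
    ys = [y for xi, y in data if abs(xi - x) <= radius]
    if not ys:
        raise ValueError("neighborhood is empty; try a larger radius")
    return statistics.fmean(ys)

print(neighborhood_average(cars, 104, radius=5))
```

The radius plays the role of flexibility: a tiny window averages very few points and is noisy, while a huge window averages over cars that are not really comparable.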

Notation pause!

$$\hat{f}(x) = \underbrace{E}_{\text{The expectation}}[\underbrace{Y}_{\text{of } Y} \underbrace{\,\mid\,}_{\text{given}} \underbrace{X \in N(x)}_{X \text{ is in the neighborhood of } x}]$$

If you need a notation pause at any point during this class, please let me know!

Estimating f

💡 We can relax the definition and let

$$\hat{f}(x) = E[Y \mid X \in N(x)]$$

- Nearest neighbor averaging does pretty well with small p ($p \le 4$) and large n

- Nearest neighbor is not great when p is large because of the curse of dimensionality (nearest neighbors tend to be far away in high dimensions)

What do I mean by p? What do I mean by n?
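Here p is the number of predictors (the dimension of X) and n is the number of observations. One way to see the curse of dimensionality is to scatter n points uniformly in the p-dimensional unit cube and measure how far a new point is from its nearest neighbor. With n held fixed, that distance grows quickly with p, so the "neighborhood" stops being local. This is a sketch; n, the number of query points, and the seed are arbitrary choices.

```python
import math
import random

def mean_nn_distance(n, p, n_queries=100, seed=0):
    """Average Euclidean distance from a random query point to its nearest
    neighbor among n points drawn uniformly in the p-dimensional unit cube."""
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(p)] for _ in range(n)]
    total = 0.0
    for _ in range(n_queries):
        q = [rng.random() for _ in range(p)]
        total += min(math.dist(q, pt) for pt in points)
    return total / n_queries

for p in (1, 2, 4, 10, 20):
    print(p, round(mean_nn_distance(n=1000, p=p), 3))
```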

Parametric models

A common parametric model is a linear model

$$f(X) = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p$$

- A linear model has $p + 1$ parameters ($\beta_0, \dots, \beta_p$)

- We estimate these parameters by fitting a model to training data

- Although this model is almost never correct, it can often be a good interpretable approximation to the unknown true function, $f(X)$
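A minimal sketch of estimating the p + 1 parameters by least squares, using only the Python standard library (in practice you would reach for a statistics package). It solves the normal equations with Gauss-Jordan elimination on simulated data; the true coefficients, noise level, and sample size are hypothetical choices.

```python
import random

def fit_linear_model(X, y):
    """Least-squares estimates [beta_0, ..., beta_p] for y ~ beta_0 + sum_j beta_j * x_j,
    found by solving the normal equations (A^T A) beta = A^T y."""
    A = [[1.0] + list(row) for row in X]  # prepend a column of 1s for the intercept
    k = len(A[0])                         # k = p + 1 parameters
    # Augmented system [A^T A | A^T y].
    M = [[sum(a[r] * a[c] for a in A) for c in range(k)] +
         [sum(a[r] * yi for a, yi in zip(A, y))] for r in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(M[r][col]))  # partial pivoting
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(k):  # Gauss-Jordan: clear this column in every other row
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [m_r - factor * m_c for m_r, m_c in zip(M[r], M[col])]
    return [M[r][k] / M[r][r] for r in range(k)]

# Hypothetical simulated data with p = 3 predictors and Normal(0, 1) noise.
random.seed(3)
true_beta = [2.0, 1.5, -0.7, 0.3]
X = [[random.uniform(0, 10) for _ in range(3)] for _ in range(500)]
y = [true_beta[0] + sum(b * x for b, x in zip(true_beta[1:], row)) + random.gauss(0, 1)
     for row in X]

beta_hat = fit_linear_model(X, y)
print([round(b, 2) for b in beta_hat])
```

Here the data really are linear, so the estimates land near `true_beta`; with real data the linear form is only an approximation, as the slide notes.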

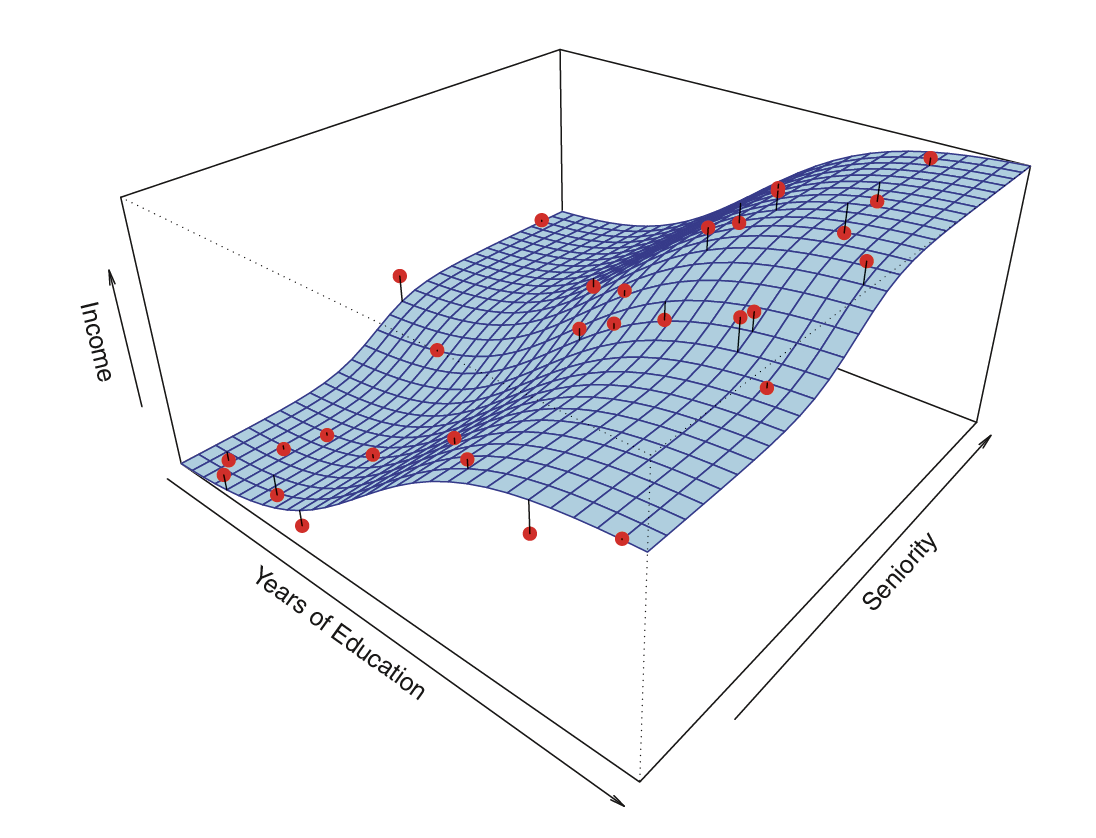

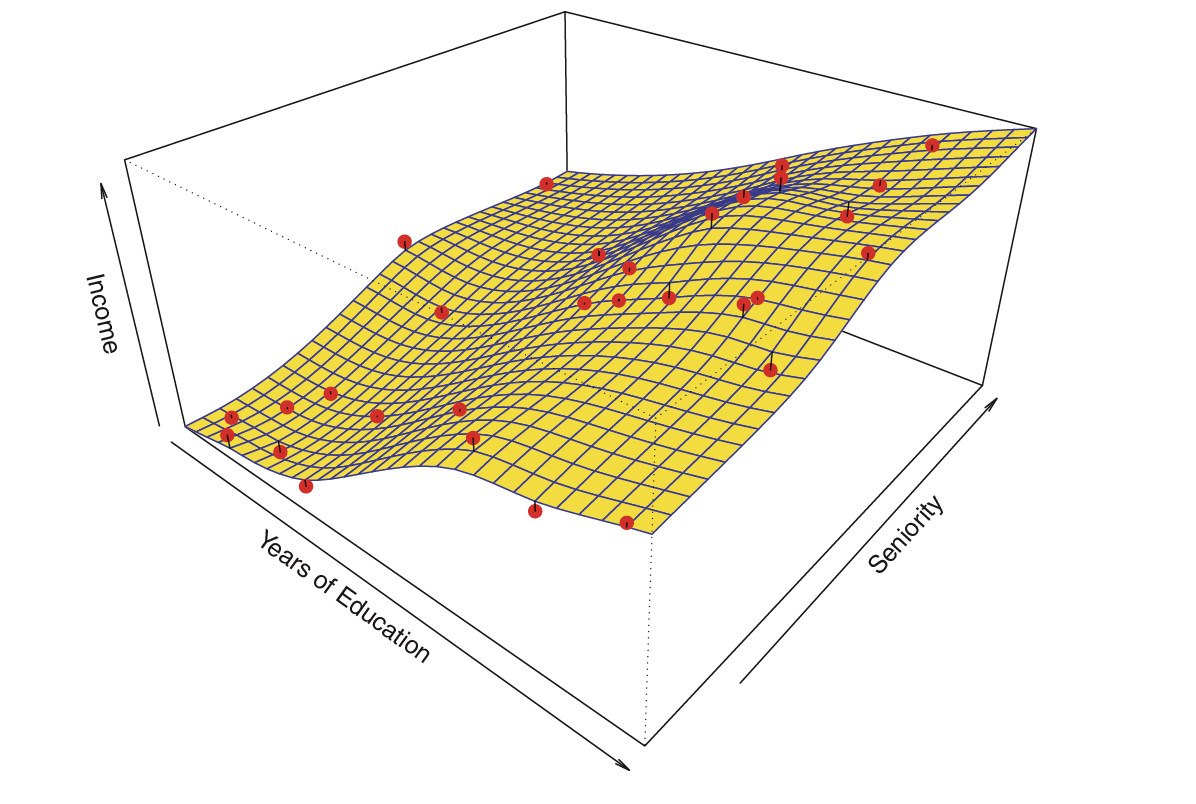

Let's look at a simulated example

- The red points are simulated values for income from the model:

$$\text{income} = f(\text{education}, \text{seniority}) + \epsilon$$

- f is the blue surface

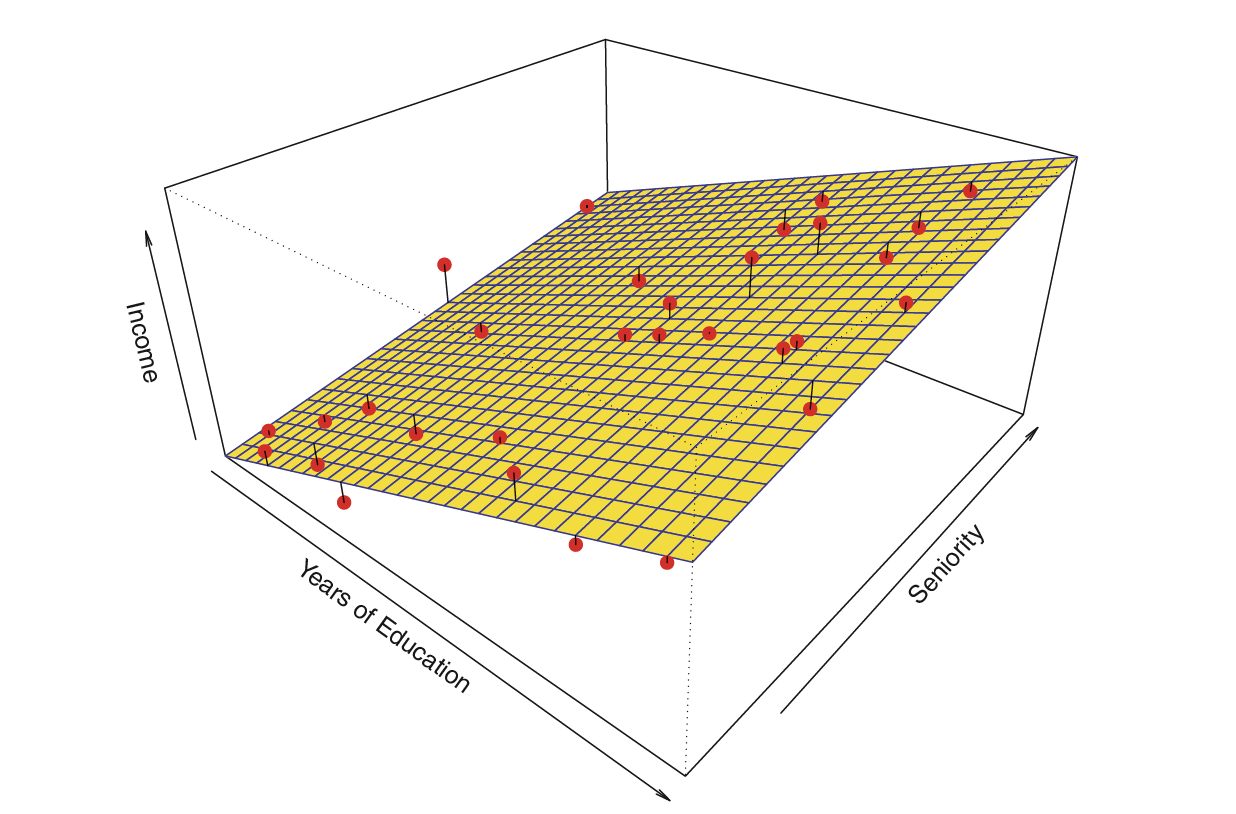

Linear regression model fit to the simulated data

$$\hat{f}_L(\text{education}, \text{seniority}) = \hat{\beta}_0 + \hat{\beta}_1 \times \text{education} + \hat{\beta}_2 \times \text{seniority}$$

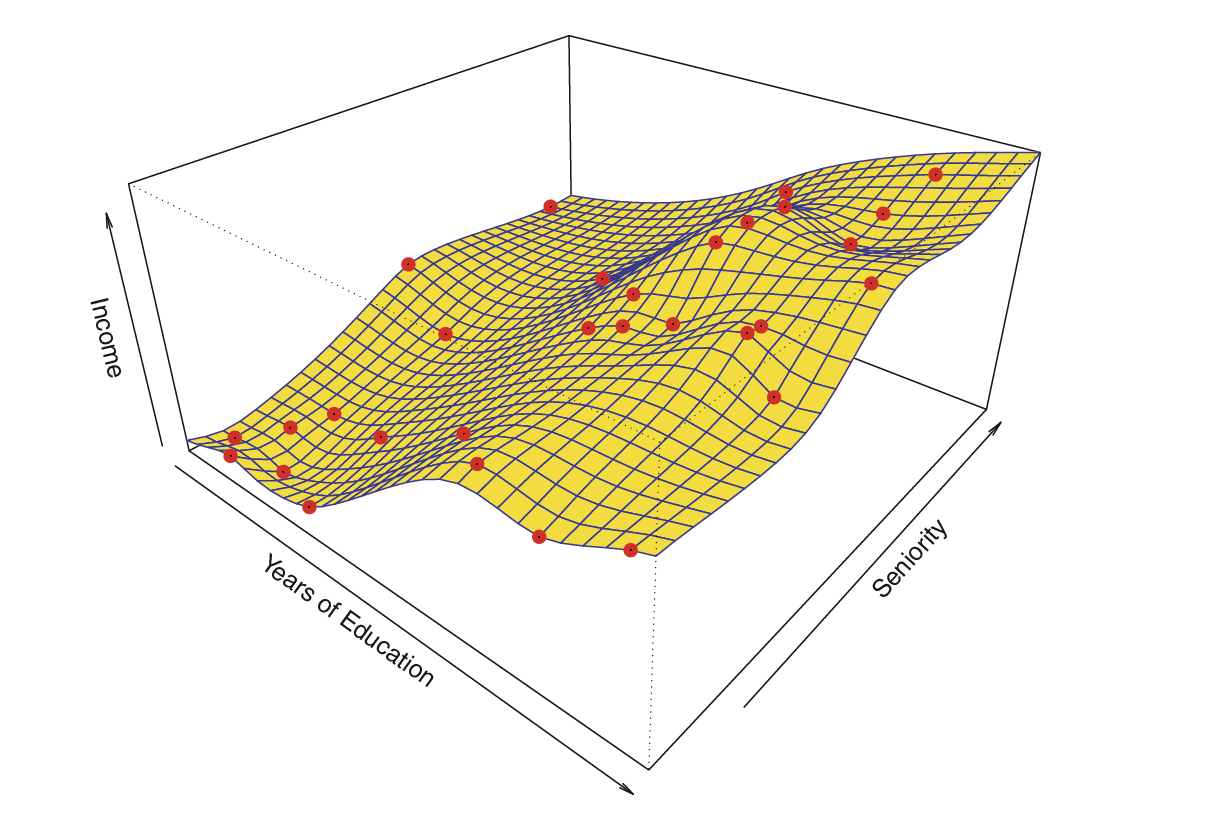

- More flexible regression model $\hat{f}_S(\text{education}, \text{seniority})$ fit to the simulated data

- Here we use a technique called a thin-plate spline to fit a flexible surface

And an even MORE flexible 😱 model $\hat{f}(\text{education}, \text{seniority})$

- Here we've basically drawn the surface to hit every point, minimizing the training error, but completely overfitting

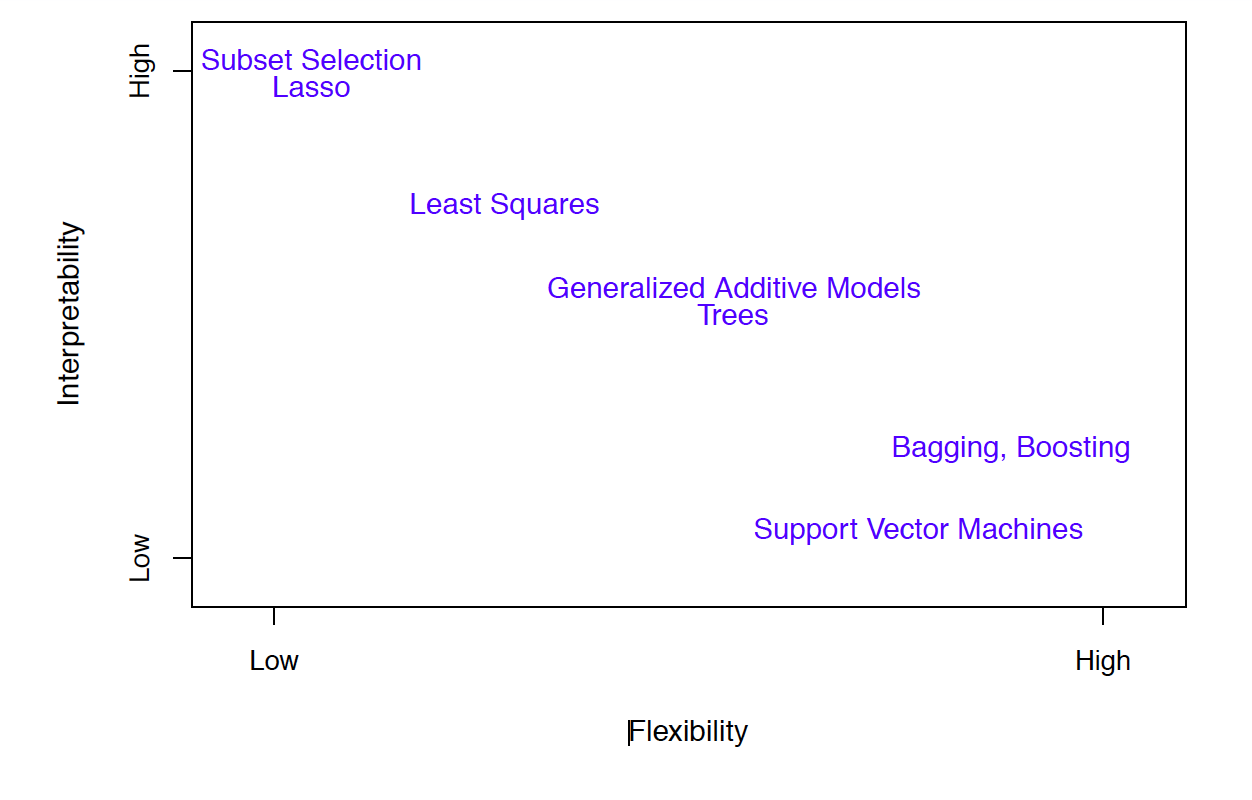

🤹 Finding balance

- Prediction accuracy versus interpretability

- Linear models are easy to interpret; thin-plate splines are not

- Good fit versus overfit or underfit

- How do we know when the fit is just right?

- Parsimony versus black-box

- We often prefer a simpler model involving fewer variables over a black-box predictor involving them all

Accuracy

- We've fit a model $\hat{f}(x)$ to some training data $\text{train} = \{x_i, y_i\}_1^N$

- We can measure accuracy as the average squared prediction error over that train data

$$\text{MSE}_{\text{train}} = \text{Ave}_{i \in \text{train}}[y_i - \hat{f}(x_i)]^2$$

What can go wrong here?

- This may be biased towards overfit models
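To see why MSE_train favors overfit models, here is a sketch that uses K-nearest-neighbor averaging as the flexible method (swapped in for illustration; any method that can chase individual points behaves the same way) on hypothetical data y = sin(2πx) + noise. With k = 1 each training point is its own nearest neighbor, so the fit reproduces every y_i and MSE_train drops to exactly 0.

```python
import math
import random
import statistics

def knn_regress(train, x, k):
    """f_hat(x): average y of the k training points whose x is closest to x."""
    nearest = sorted(train, key=lambda pt: abs(pt[0] - x))[:k]
    return statistics.fmean(y for _, y in nearest)

def mse(data, train, k):
    """Ave over (x_i, y_i) in data of [y_i - f_hat(x_i)]^2, with f_hat fit on train."""
    return statistics.fmean((y - knn_regress(train, x, k)) ** 2 for x, y in data)

# Hypothetical simulated training data: y = sin(2*pi*x) + Normal(0, 0.5) noise.
random.seed(4)
train = [(x, math.sin(2 * math.pi * x) + random.gauss(0, 0.5))
         for x in (random.random() for _ in range(100))]

for k in (50, 15, 5, 1):  # smaller k = more flexible fit
    print(f"k = {k:2d}: MSE_train = {mse(train, train, k):.3f}")
```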

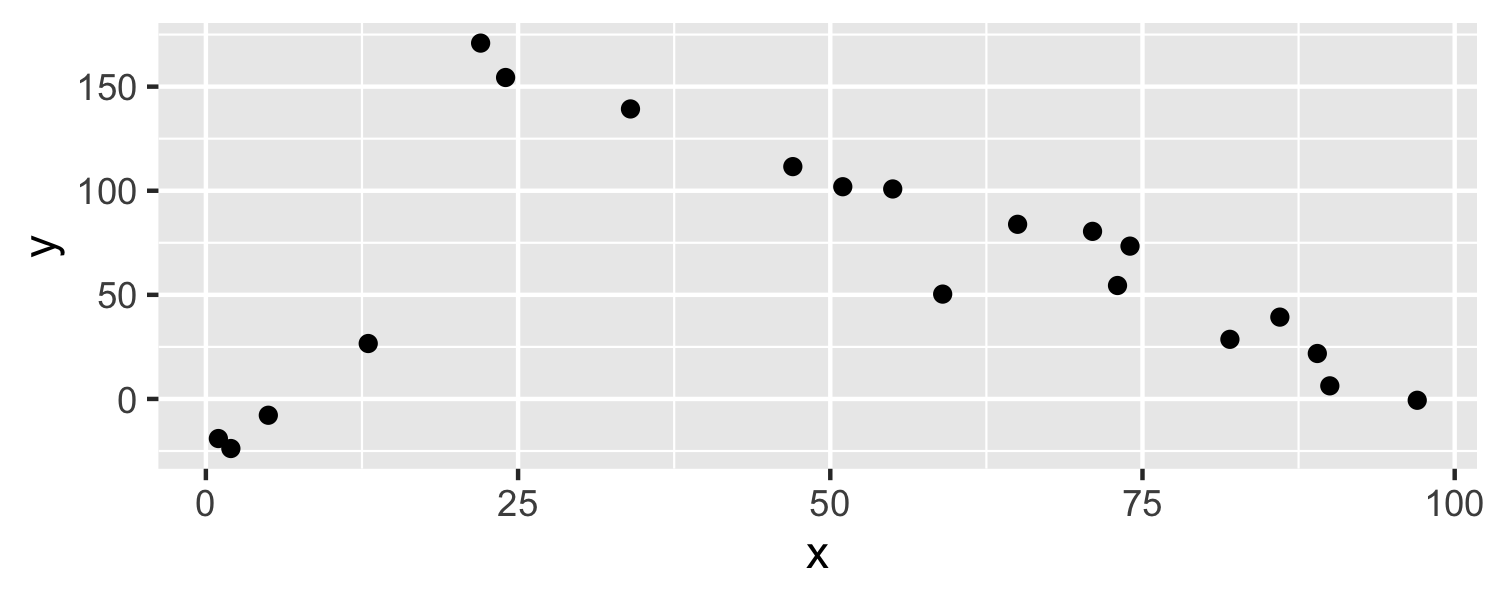

Accuracy

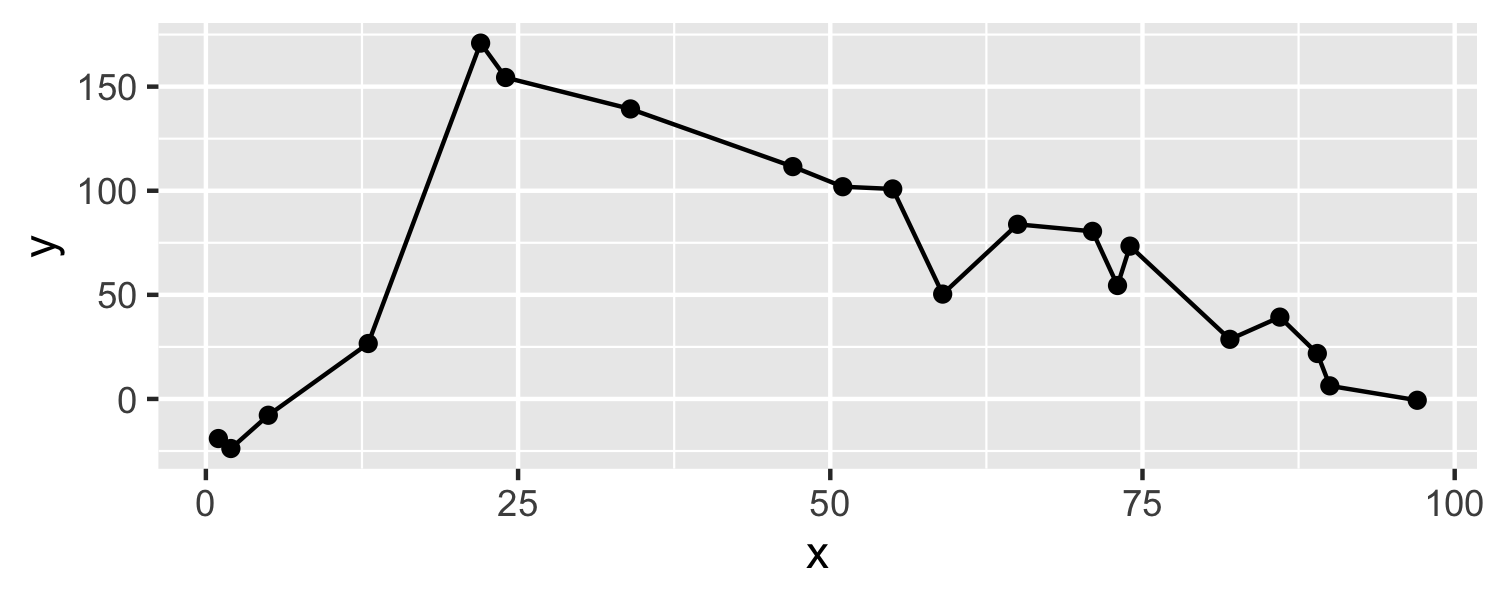

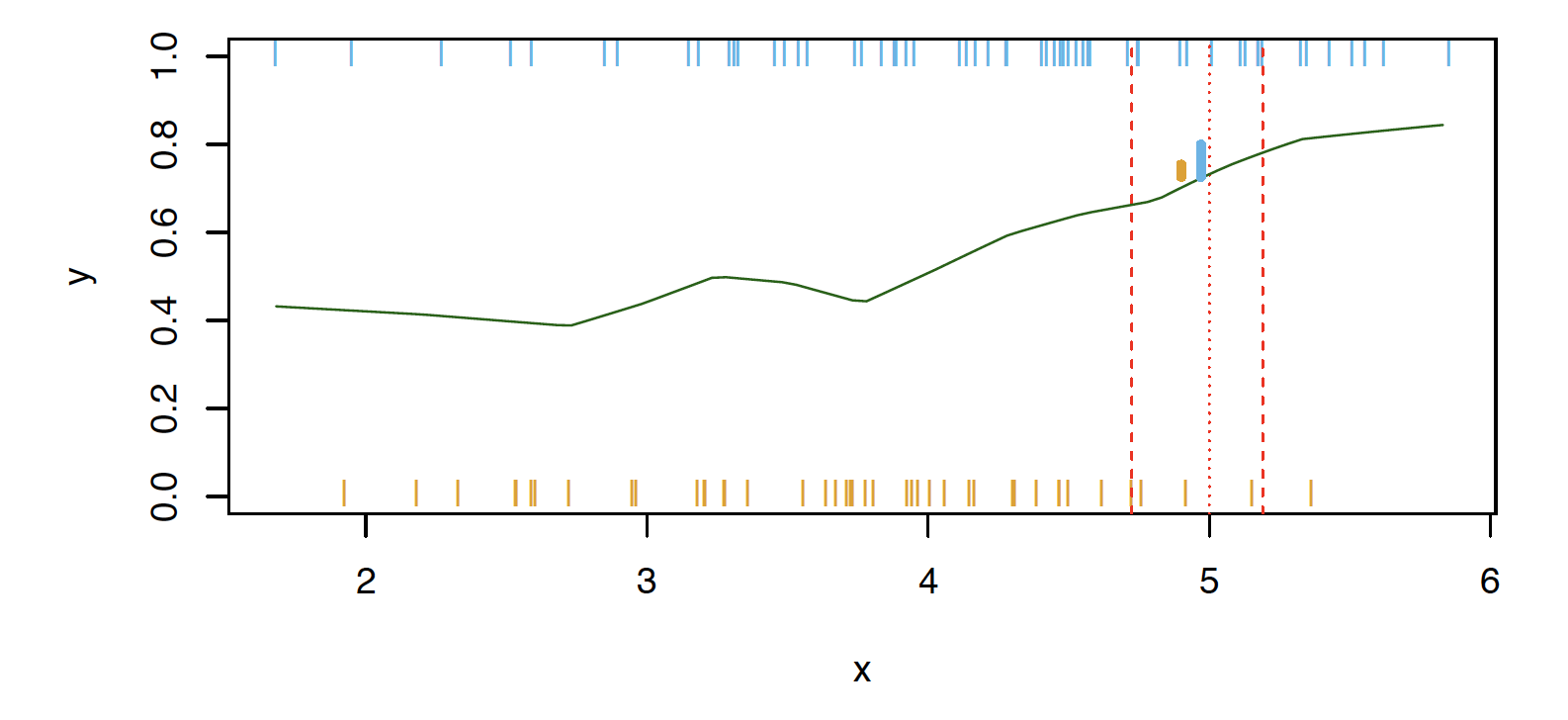

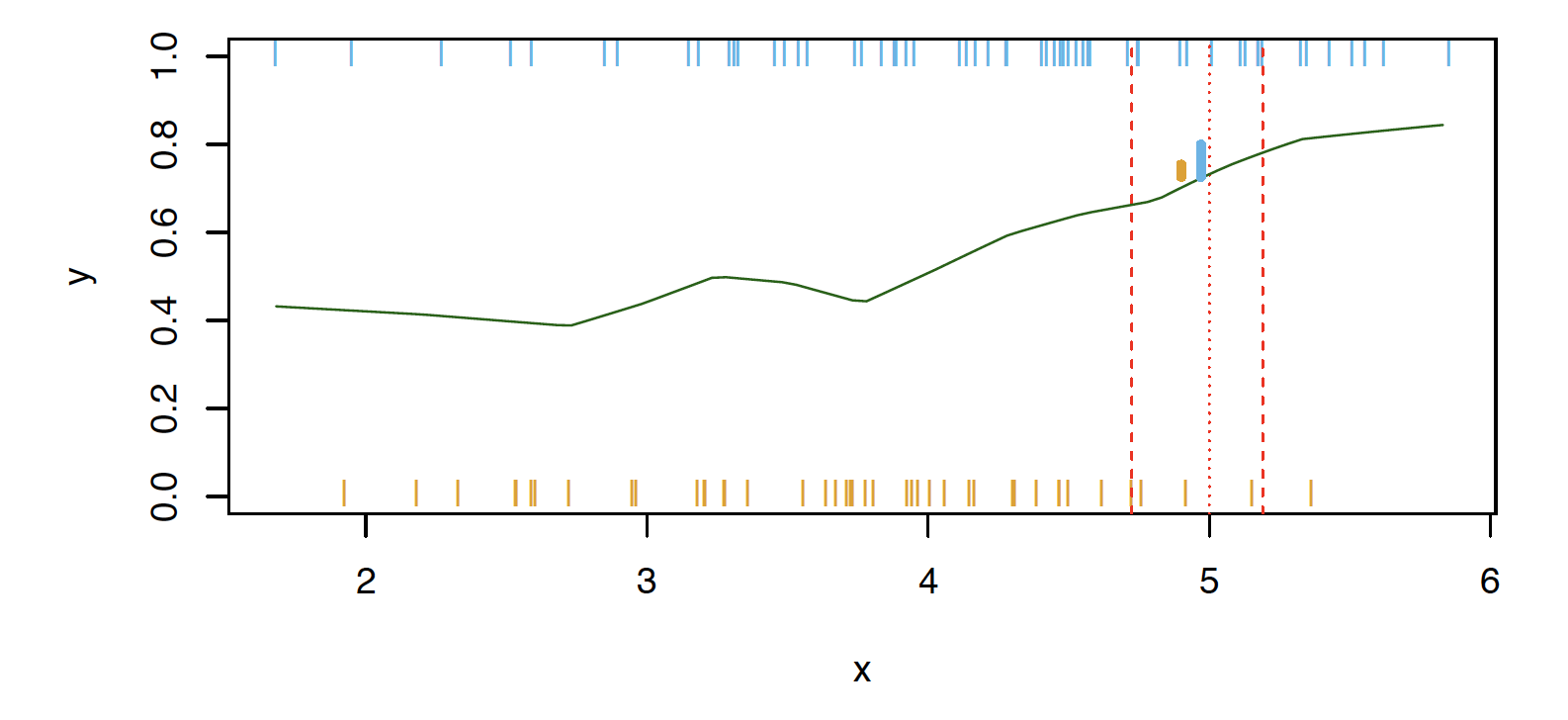

I have some train data, plotted above. What $\hat{f}(x)$ would minimize $\text{MSE}_{\text{train}}$?

$$\text{MSE}_{\text{train}} = \text{Ave}_{i \in \text{train}}[y_i - \hat{f}(x_i)]^2$$

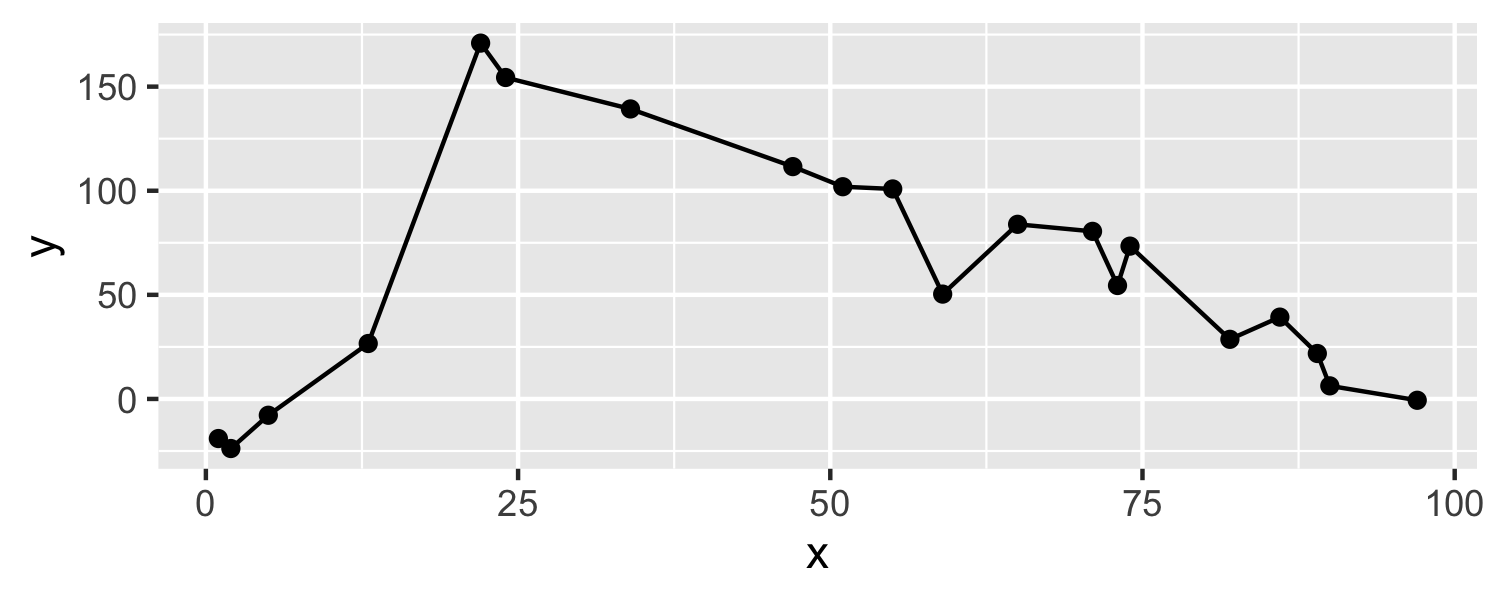

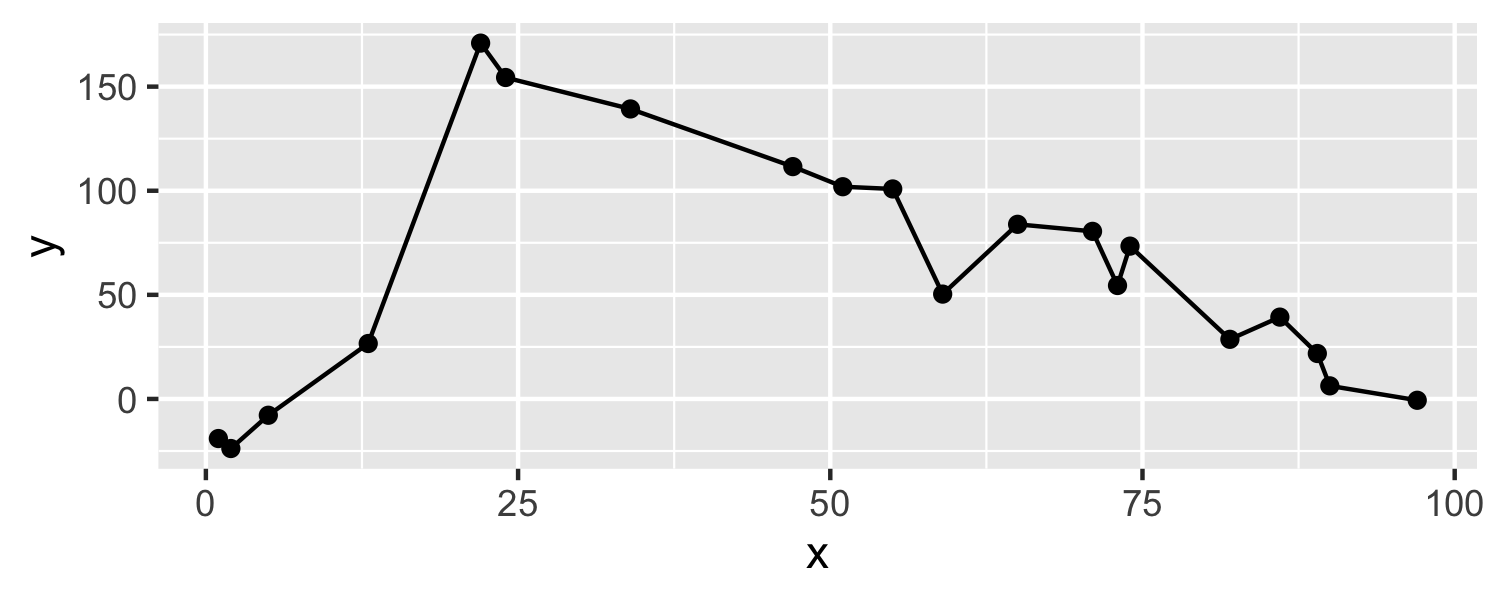

Accuracy

What is wrong with this?

It's overfit!

Accuracy

If we get a new sample, that overfit model is probably going to be terrible!

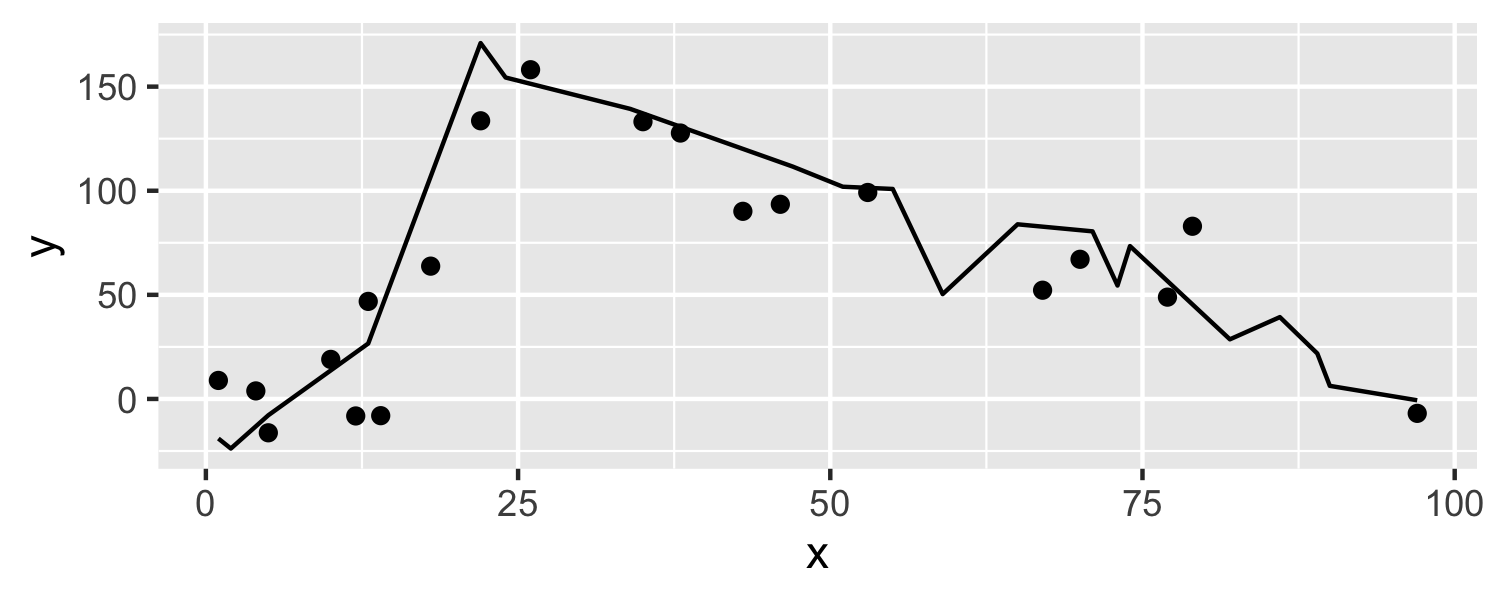

Accuracy

- We've fit a model $\hat{f}(x)$ to some training data $\text{train} = \{x_i, y_i\}_1^N$

- Instead of measuring accuracy as the average squared prediction error over that train data, we can compute it using fresh test data $\text{test} = \{x_i, y_i\}_1^M$

$$\text{MSE}_{\text{test}} = \text{Ave}_{i \in \text{test}}[y_i - \hat{f}(x_i)]^2$$
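Adding a fresh test set shows the difference. In this sketch the data-generating process (y = sin(2πx) plus Normal(0, 0.5²) noise), the sample sizes, and the use of K-nearest-neighbor averaging as the flexibility knob are all hypothetical choices. The k = 1 fit, perfect on the training data, does noticeably worse on test data than a smoother fit.

```python
import math
import random
import statistics

def knn_regress(train, x, k):
    """f_hat(x): average y of the k training points whose x is closest to x."""
    nearest = sorted(train, key=lambda pt: abs(pt[0] - x))[:k]
    return statistics.fmean(y for _, y in nearest)

def mse(data, train, k):
    """Ave over (x_i, y_i) in data of [y_i - f_hat(x_i)]^2, with f_hat fit on train."""
    return statistics.fmean((y - knn_regress(train, x, k)) ** 2 for x, y in data)

def simulate(n, rng):
    """Hypothetical process: y = sin(2*pi*x) + Normal(0, 0.5) noise, x ~ Uniform(0, 1)."""
    data = []
    for _ in range(n):
        x = rng.random()
        data.append((x, math.sin(2 * math.pi * x) + rng.gauss(0, 0.5)))
    return data

rng = random.Random(5)
train, test = simulate(100, rng), simulate(1000, rng)  # test data are never used for fitting
for k in (50, 15, 5, 1):
    print(f"k = {k:2d}: MSE_train = {mse(train, train, k):.3f}  "
          f"MSE_test = {mse(test, train, k):.3f}")
```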

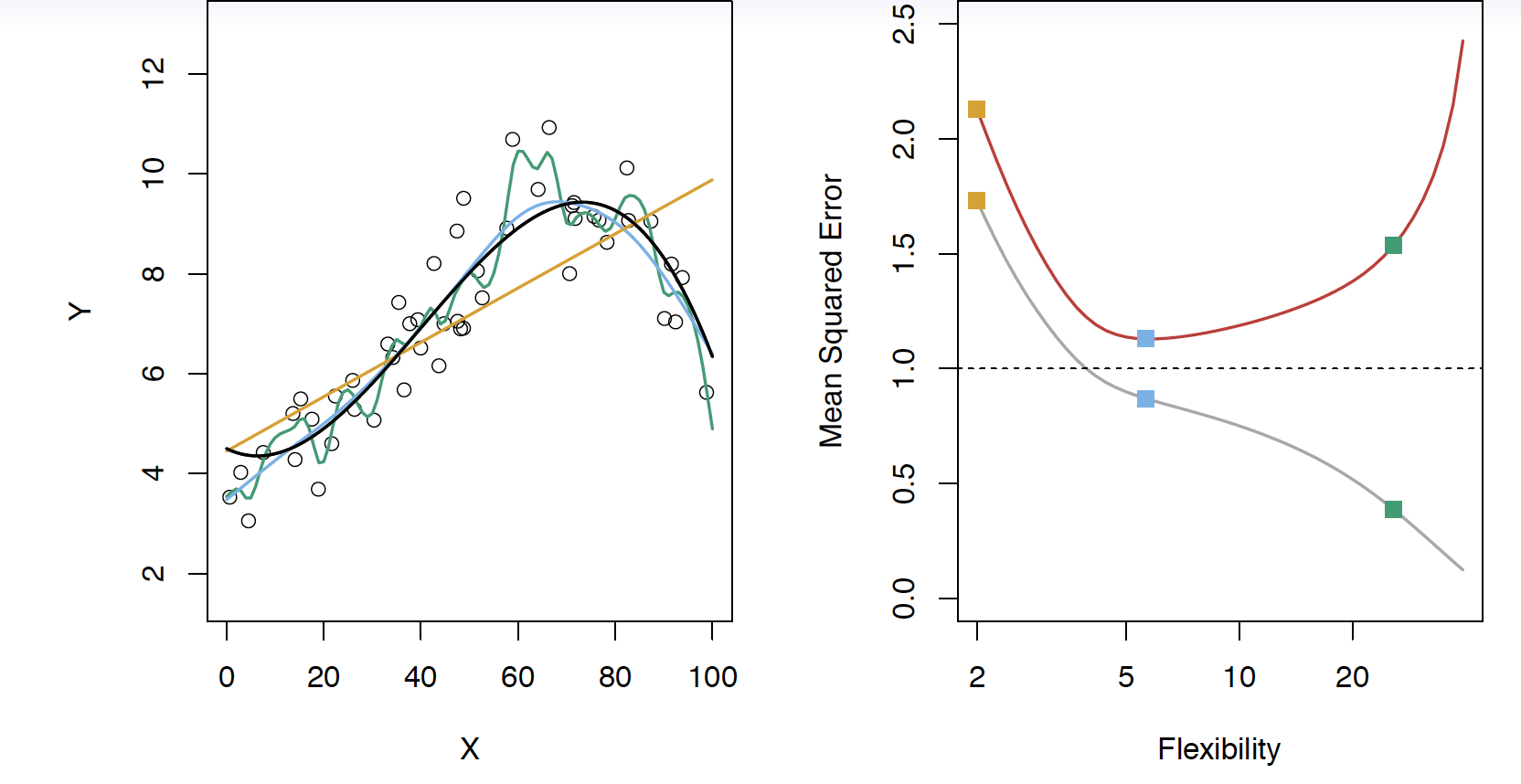

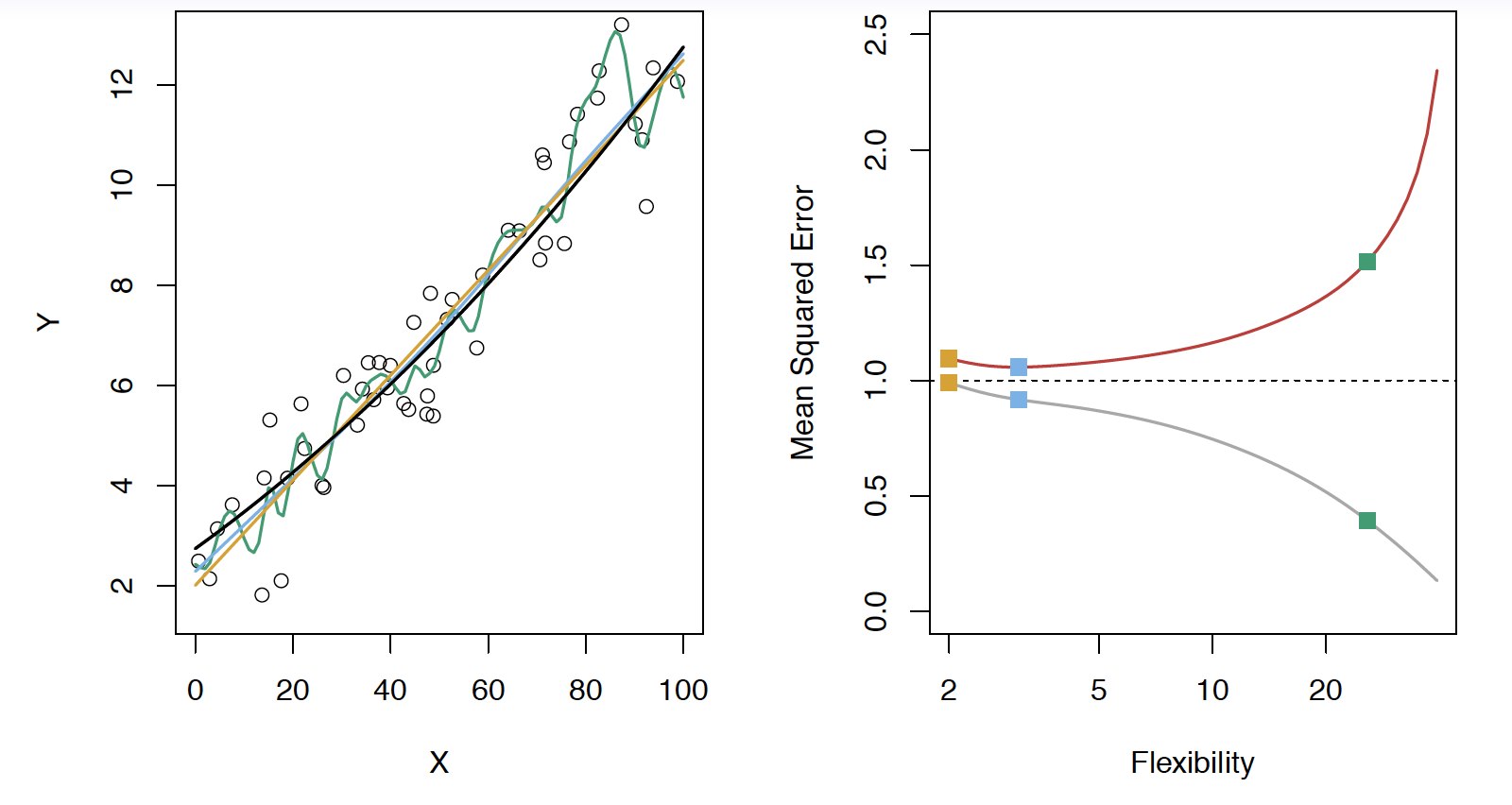

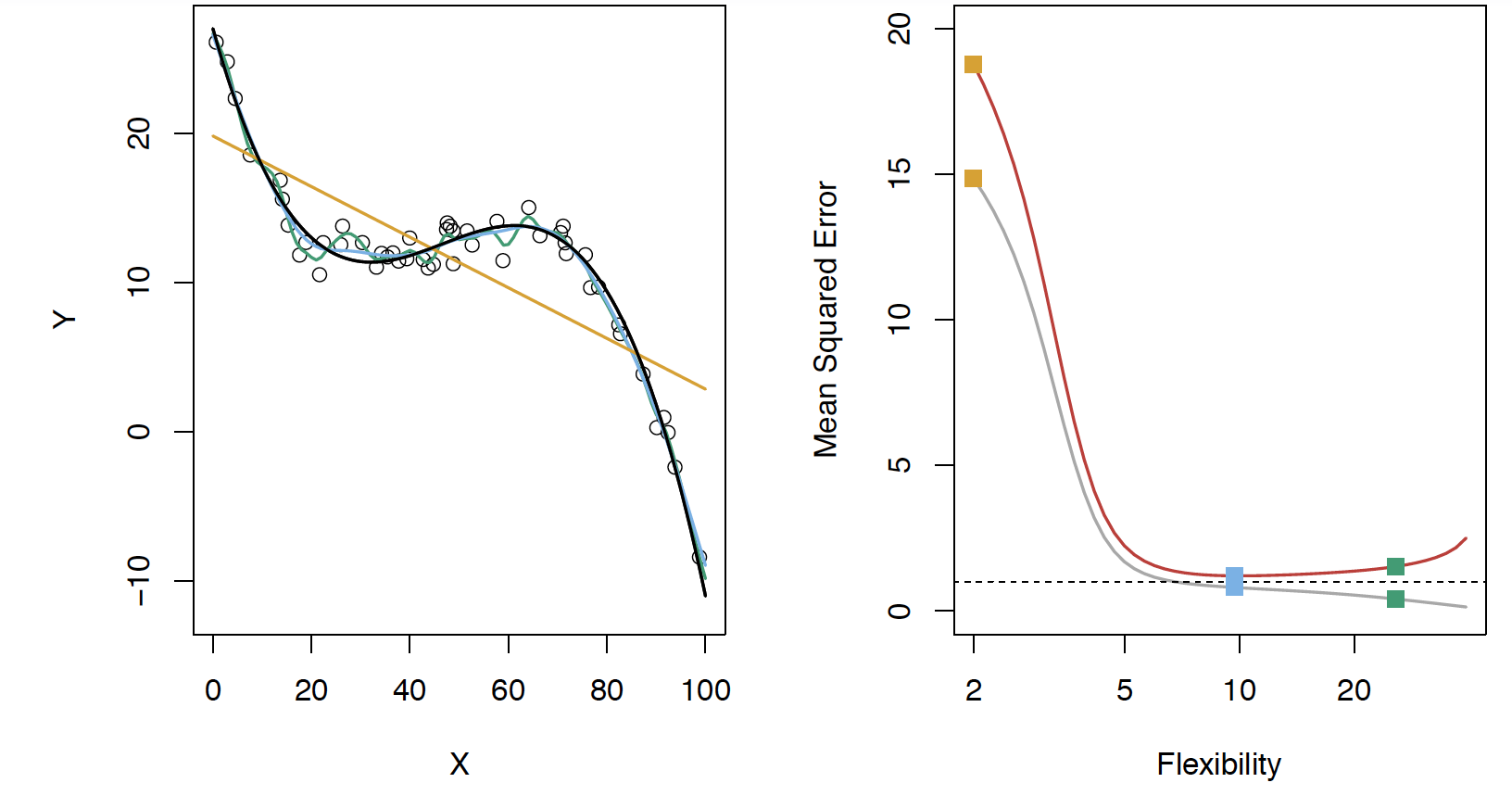

Black curve is the "truth" on the left. Red curve on the right is $\text{MSE}_{\text{test}}$; grey curve is $\text{MSE}_{\text{train}}$. Orange, blue, and green curves/squares correspond to fits of different flexibility.

Here the truth is smoother, so the smoother fit and linear model do really well

Here the truth is wiggly and the noise is low, so the more flexible fits do the best

Bias-variance trade-off

- We've fit a model, ^f(x), to some training data

Bias-variance trade-off

- We've fit a model, ^f(x), to some training data* Let's pull a test observation from this population ( x0,y0 )

Bias-variance trade-off

- We've fit a model, ^f(x), to some training data Let's pull a test observation from this population ( x0,y0 ) The true model is Y=f(x)+ϵ

Bias-variance trade-off

- We've fit a model, ^f(x), to some training data Let's pull a test observation from this population ( x0,y0 ) The true model is Y=f(x)+ϵ* f(x)=E[Y|X=x]

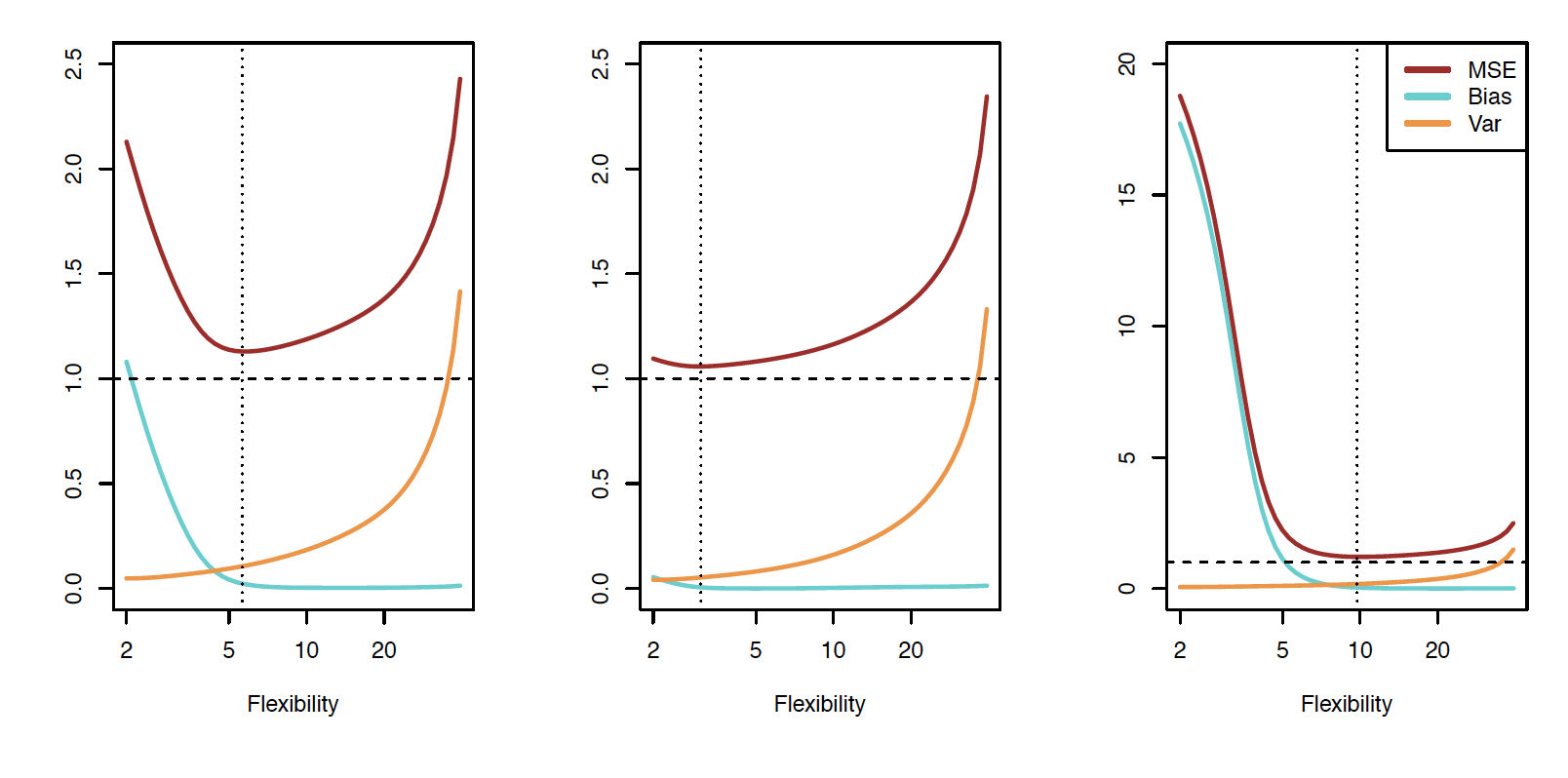

E(y0−^f(x0))2=Var(^f(x0))+[Bias(^f(x0))]2+Var(ϵ)

Bias-variance trade-off

- We've fit a model, ^f(x), to some training data Let's pull a test observation from this population ( x0,y0 ) The true model is Y=f(x)+ϵ* f(x)=E[Y|X=x]

E(y0−^f(x0))2=Var(^f(x0))+[Bias(^f(x0))]2+Var(ϵ)

The expectation averages over the variability of y0 as well as the variability of the training data. Bias(^f(x0))=E[^f(x0)]−f(x0)

- As flexibility of ^f ↑, its variance ↑ and its bias ↓

Bias-variance trade-off

- We've fit a model, ^f(x), to some training data Let's pull a test observation from this population ( x0,y0 ) The true model is Y=f(x)+ϵ* f(x)=E[Y|X=x]

E(y0−^f(x0))2=Var(^f(x0))+[Bias(^f(x0))]2+Var(ϵ)

The expectation averages over the variability of y0 as well as the variability of the training data. Bias(^f(x0))=E[^f(x0)]−f(x0)

- As flexibility of ^f ↑, its variance ↑ and its bias ↓ choosing the flexibility based on average test error amounts to a *bias-variance trade-off

- That U-shape we see for the test MSE curves is due to this bias-variance trade-off

- The expected test MSE for a given x0 can be decomposed into three components: the variance of ^f(xo), the squared bias of ^f(xo) and t4he variance of the error term ϵ

- Here the notation E[y0−^f(x0)]2 defines the expected test MSE, and refers to the average test MSE that we would obtain if we repeatedly estimated f using a large number of training sets, and tested each at x0

- The overall expected test MSE can be computed by averaging E[y0−^f(x0)]2 over all possible values of x0 in the test set.

- SO we want to minimize the expected test error, so to do that we need to pick a statistical learning method to simultenously acheive low bias and low variance.

- Since both of these quantities are non-negative, the expected test MSE can never fall below Var( ϵ )
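The decomposition can be estimated by brute force: draw many training sets, refit at x₀ each time, and look at the spread (variance) and the average offset (bias) of the predictions. This sketch assumes a hypothetical truth f(x) = sin(2πx), Var(ϵ) = 0.25, x₀ = 0.25, and K-nearest-neighbor averaging as the method, where small k is flexible and large k is rigid. The directly simulated test error should match Var + Bias² + Var(ϵ), with variance falling and bias rising as k grows.

```python
import math
import random
import statistics

SIGMA = 0.5  # hypothetical sd of epsilon, so Var(epsilon) = 0.25

def f(x):
    """Hypothetical true regression function f(x) = E[Y | X = x]."""
    return math.sin(2 * math.pi * x)

def knn_at(train, x0, k):
    """KNN regression estimate f_hat(x0) from one training set."""
    nearest = sorted(train, key=lambda pt: abs(pt[0] - x0))[:k]
    return statistics.fmean(y for _, y in nearest)

def decompose(k, x0=0.25, n_train=100, n_sims=2000, seed=6):
    """Return (simulated expected test MSE at x0, Var(f_hat(x0)), Bias(f_hat(x0))^2)."""
    rng = random.Random(seed)
    preds, sq_errors = [], []
    for _ in range(n_sims):
        train = [(x, f(x) + rng.gauss(0, SIGMA))
                 for x in (rng.random() for _ in range(n_train))]
        pred = knn_at(train, x0, k)
        y0 = f(x0) + rng.gauss(0, SIGMA)  # fresh test response at x0
        preds.append(pred)
        sq_errors.append((y0 - pred) ** 2)
    variance = statistics.pvariance(preds)
    bias_sq = (statistics.fmean(preds) - f(x0)) ** 2
    return statistics.fmean(sq_errors), variance, bias_sq

for k in (1, 10, 50):
    total, var, b2 = decompose(k)
    print(f"k = {k:2d}: simulated test MSE = {total:.3f}, "
          f"Var + Bias^2 + Var(eps) = {var + b2 + SIGMA ** 2:.3f}")
```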

Bias-variance trade-off

Classification

Notation

- Y is the response variable. It is qualitative

- C(X) is the classifier that assigns a class label from $\mathcal{C}$ to some future unlabeled observation, X

- Examples:

- Email can be classified as $\mathcal{C} = \{\text{spam}, \text{not spam}\}$

- Written number is one of $\mathcal{C} = \{0, 1, 2, \dots, 9\}$

Classification Problem

What is the goal?

- Build a classifier C(X) that assigns a class label from $\mathcal{C}$ to a future unlabeled observation X

- Assess the uncertainty in each classification

- Understand the roles of the different predictors among $X = (X_1, X_2, \dots, X_p)$

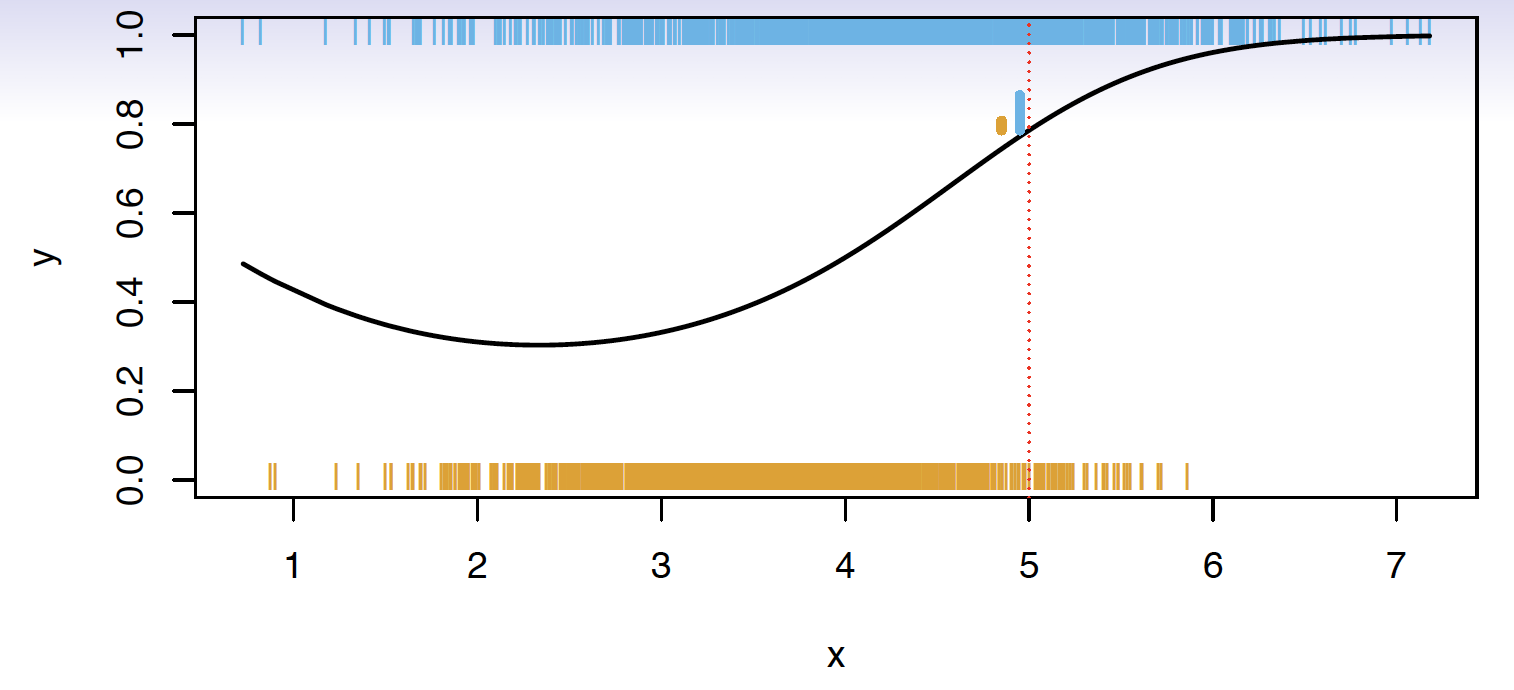

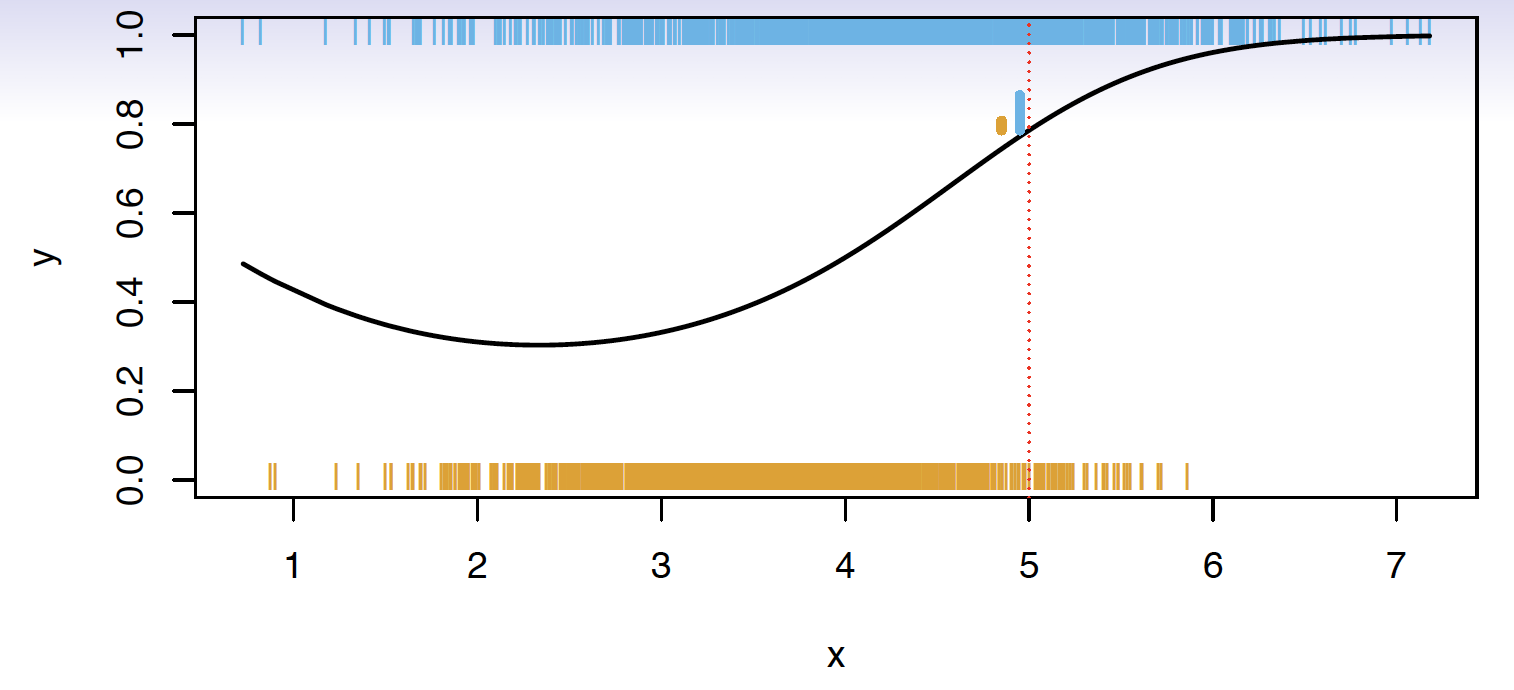

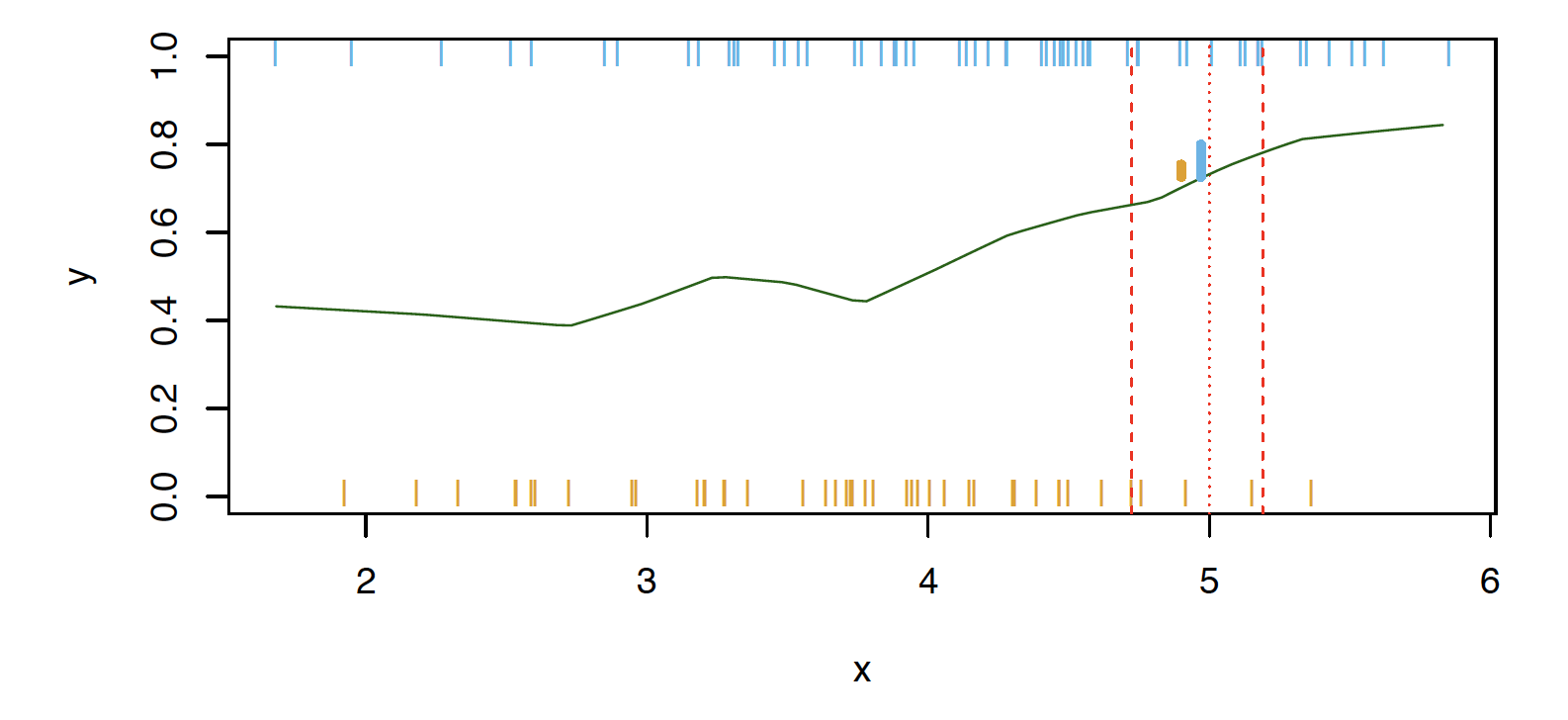

Suppose there are K elements in $\mathcal{C}$, numbered $1, 2, \dots, K$

$$p_k(x) = P(Y = k \mid X = x), \quad k = 1, 2, \dots, K$$

These are conditional class probabilities at x

How do you think we could calculate this?

- In the plot, you could examine the mini-barplot at x = 5

- The Bayes optimal classifier at x is

$$C(x) = j \quad \text{if } p_j(x) = \max\{p_1(x), p_2(x), \dots, p_K(x)\}$$

- Notice that this is a conditional probability

- It is the probability that Y equals k given the observed predictor vector, x

- Suppose we were using a Bayes classifier for a two-class problem, where Y is 1 or 2. We would predict class 1 if $P(Y = 1 \mid X = x_0) > 0.5$ and class 2 otherwise
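The Bayes classifier is an argmax over the conditional class probabilities. A sketch, assuming the true p_k(x) values are handed to us (with real data they are unknown); the probabilities below are hypothetical. The loop at the end checks that in the two-class case the argmax matches the "predict class 1 if P(Y = 1 | X = x₀) > 0.5" rule (a probability of exactly 0.5 is a tie and is skipped).

```python
def bayes_classifier(probs):
    """Return the class j (1-indexed) with the largest conditional probability p_j(x).

    `probs` is [p_1(x), ..., p_K(x)], assumed to be the TRUE conditional class
    probabilities at x."""
    return max(range(1, len(probs) + 1), key=lambda j: probs[j - 1])

# Hypothetical conditional class probabilities at some x, with K = 3 classes.
print(bayes_classifier([0.2, 0.5, 0.3]))  # -> 2

# Two-class case: the argmax agrees with the "P(Y = 1 | X = x0) > 0.5" rule.
for p1 in (0.2, 0.49, 0.51, 0.9):
    assert bayes_classifier([p1, 1 - p1]) == (1 if p1 > 0.5 else 2)
```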

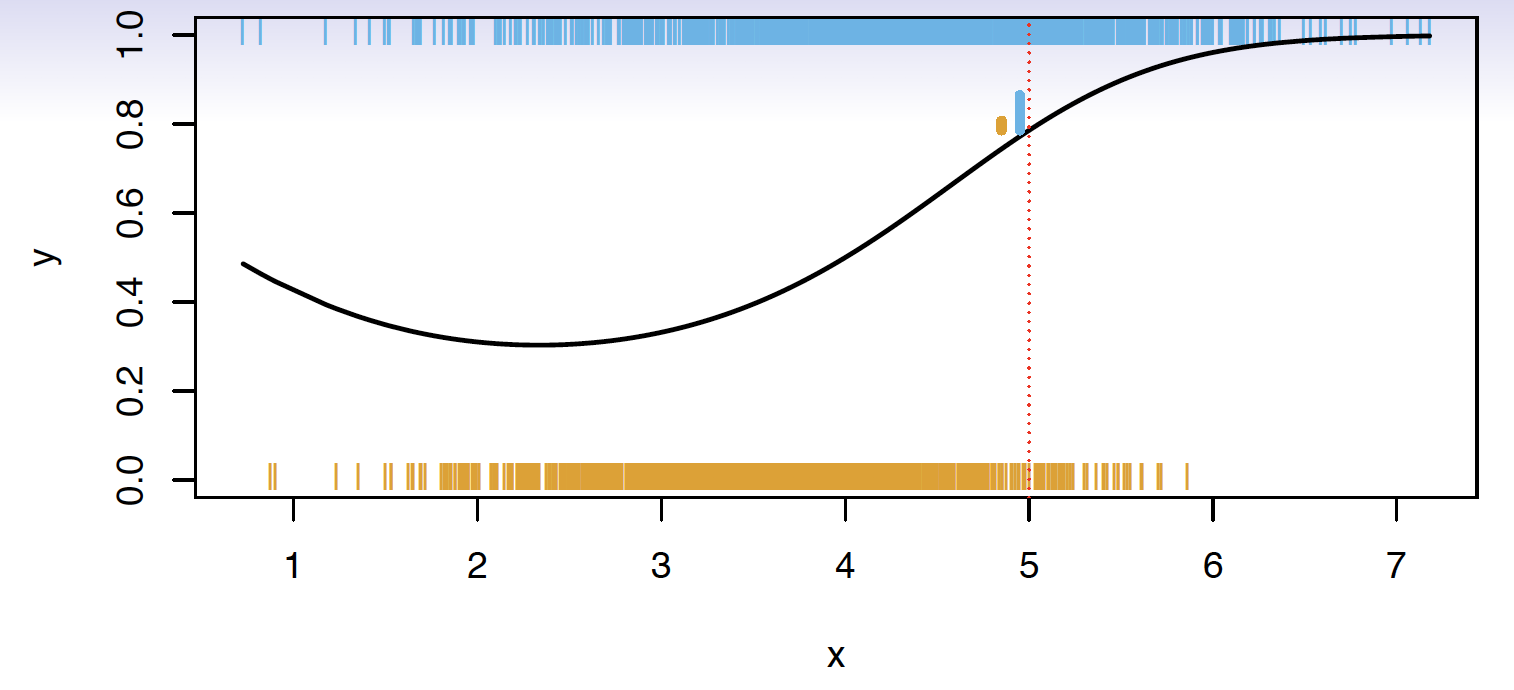

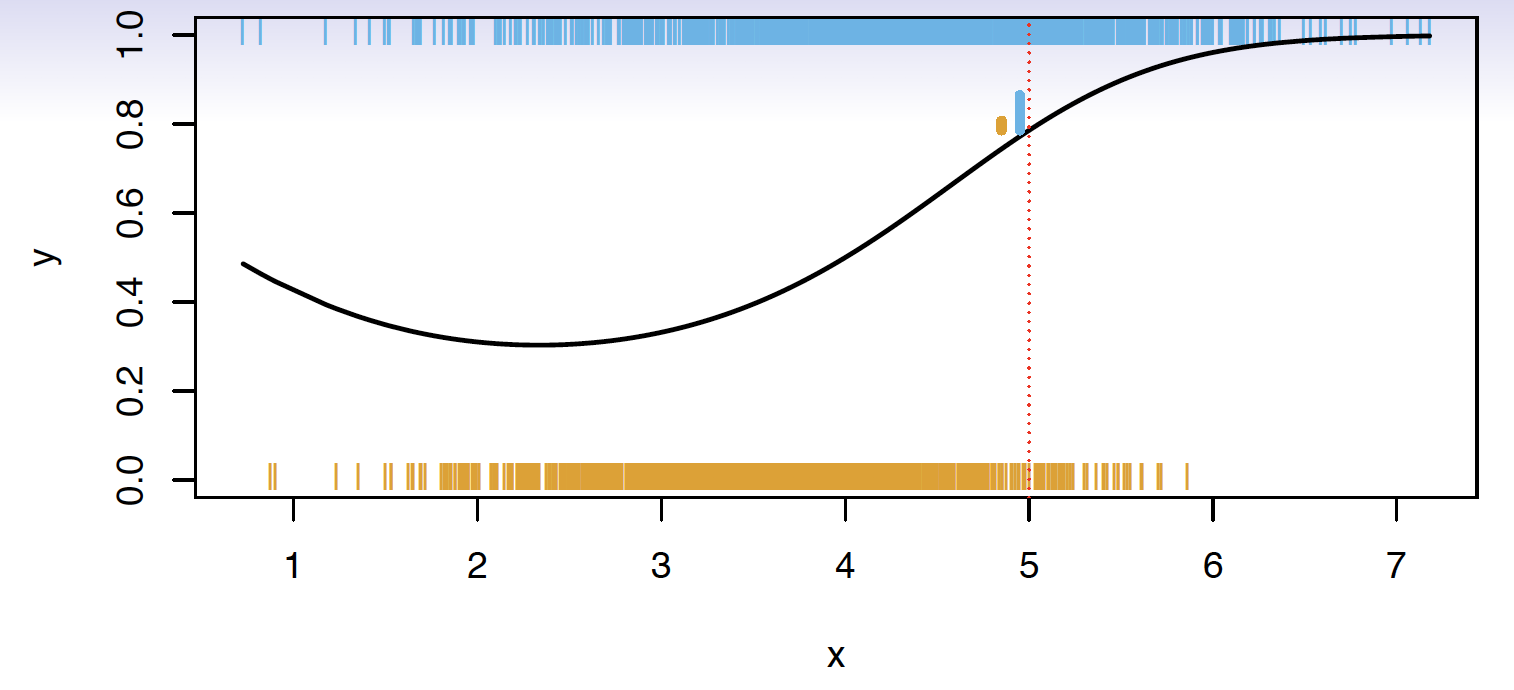

What if this was our data and there were no points at exactly x = 5? Then how could we calculate this?

- Nearest neighbor like before!

- This does break down as the dimensions grow, but the impact on $\hat{C}(x)$ is less than on $\hat{p}_k(x), k = 1, 2, \dots, K$
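A sketch of the nearest-neighbor idea for classification: estimate each p_k(5) by the class proportions among the k training points closest to x = 5, then classify to the largest. The one-dimensional data, the class names A and B, and k = 5 are hypothetical.

```python
from collections import Counter

def knn_class_probs(data, x0, k):
    """Estimate p_k(x0) = P(Y = k | X = x0) by the class proportions among the
    k training points whose x is closest to x0."""
    nearest = sorted(data, key=lambda pt: abs(pt[0] - x0))[:k]
    counts = Counter(label for _, label in nearest)
    return {label: count / k for label, count in counts.items()}

# Hypothetical one-dimensional training data (x, class); no point sits exactly at x = 5.
data = [(3.1, "A"), (4.2, "B"), (4.6, "A"), (4.8, "B"), (5.3, "B"),
        (5.5, "B"), (5.9, "A"), (6.4, "A"), (7.0, "A")]

probs = knn_class_probs(data, 5, k=5)
print(probs)                      # estimated p_k(5) for each class
print(max(probs, key=probs.get))  # classify to the most probable class
```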

Accuracy

- Misclassification error rate

$$\text{Err}_{\text{test}} = \text{Ave}_{i \in \text{test}} I[y_i \ne \hat{C}(x_i)]$$

- The Bayes classifier using the true $p_k(x)$ has the smallest error

- Some of the methods we will learn build structured models for C(x) (support vector machines, for example)

- Some build structured models for $p_k(x)$ (logistic regression, for example)

- The test error rate $\text{Ave}_{i \in \text{test}} I[y_i \ne \hat{C}(x_i)]$ is minimized, on average, by a very simple classifier that assigns each observation to the most likely class given its predictor values (that's the Bayes classifier)
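A sketch of the misclassification error rate, plus a check that the Bayes classifier wins. It assumes a hypothetical setup where the truth is known: X ~ Uniform(0, 1) and P(Y = 1 | X = x) = x, otherwise Y = 0. The Bayes classifier predicts 1 when x > 0.5, with theoretical error E[min(X, 1 - X)] = 0.25; a rule that thresholds at 0.3 instead has theoretical error 0.29.

```python
import random

def error_rate(data, classifier):
    """Err = Ave over (x_i, y_i) of I[y_i != C_hat(x_i)]."""
    return sum(y != classifier(x) for x, y in data) / len(data)

def bayes_rule(x):
    """Most likely class given x, since P(Y = 1 | X = x) = x in this setup."""
    return 1 if x > 0.5 else 0

def other_rule(x):
    """Some other, non-Bayes threshold rule."""
    return 1 if x > 0.3 else 0

# Hypothetical simulation: X ~ Uniform(0, 1), Y = 1 with probability x.
rng = random.Random(7)
test = [(x, 1 if rng.random() < x else 0) for x in (rng.random() for _ in range(200_000))]

print(error_rate(test, bayes_rule))  # theory: 0.25
print(error_rate(test, other_rule))  # theory: 0.29
```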

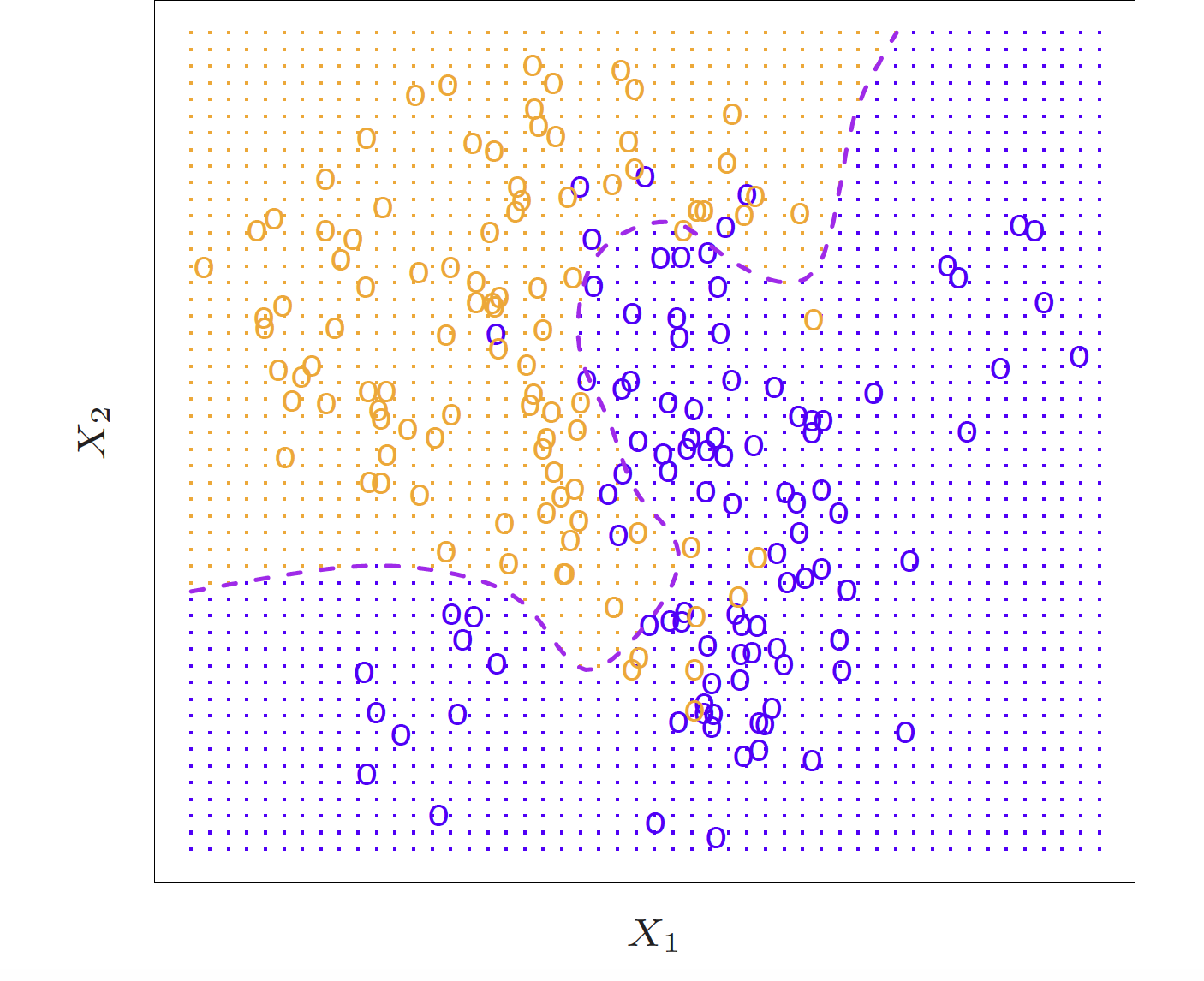

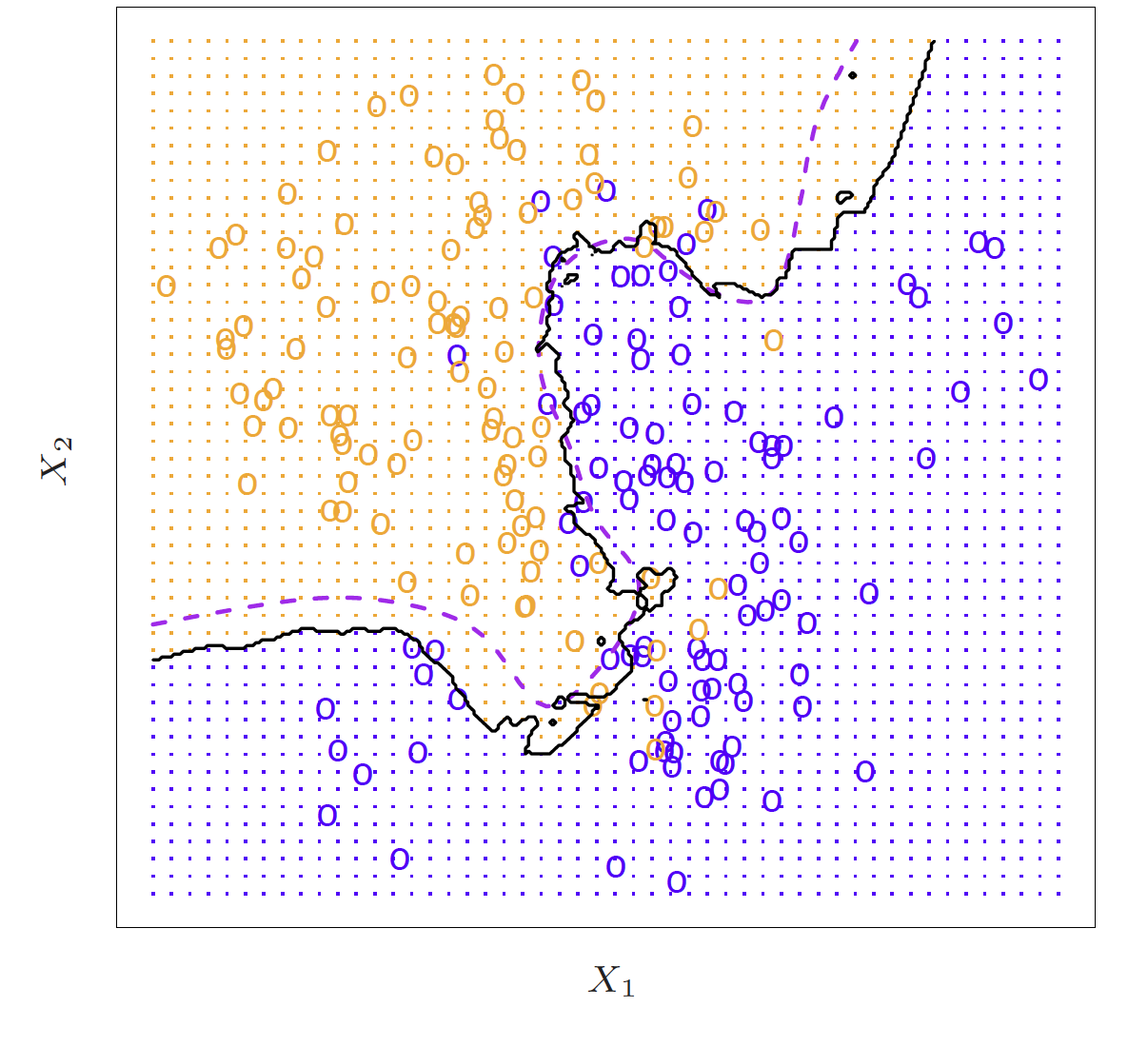

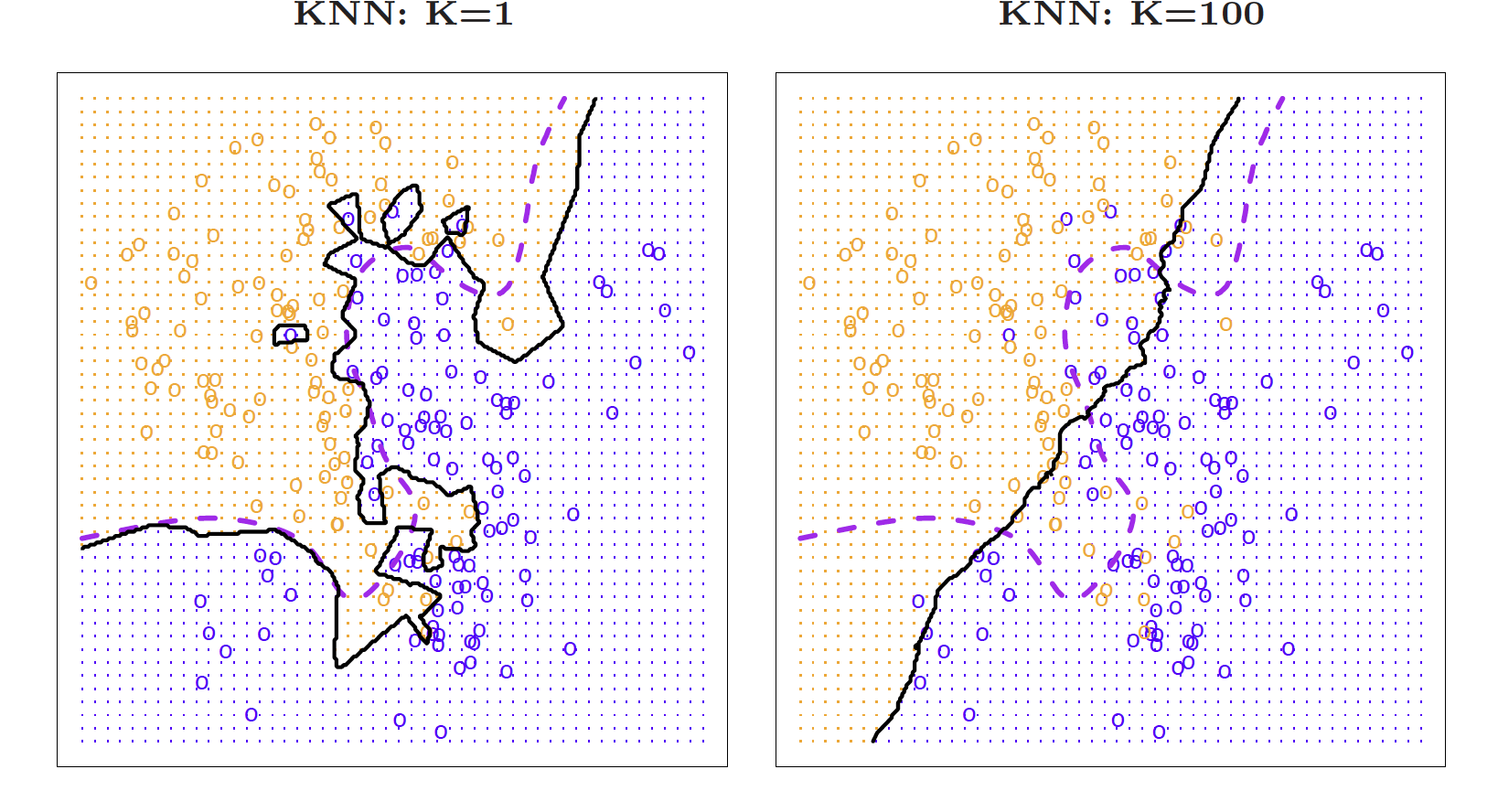

K-Nearest-Neighbors example

- Here is a simulated dataset of 100 observations in two groups, blue and orange

- The purple dashed line represents the Bayes decision boundary

- The orange background grid indicates the region where test observations will be classified as orange, and the blue grid the region where they will be classified as blue

- We'd love to use the Bayes classifier, but for real data we don't know the conditional distribution of Y given X, so computing the Bayes classifier is impossible

- A lot of methods try to estimate the conditional distribution of Y given X and then classify a given observation to the class with the highest estimated probability

- One method to do this is K-nearest neighbors

KNN (K = 10)

- Again, with K = 10, KNN finds the 10 training observations closest to a point, estimates the probability of orange and of blue as the fraction of those neighbors in each class, and classifies the point to the class with the higher estimated probability

- So here is an example of K nearest neighbors where K is 10
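The K = 10 procedure, as a minimal sketch: sort the training points by Euclidean distance to the new point, keep the 10 closest, and vote. The two-dimensional "blue" and "orange" clusters below are hypothetical stand-ins for the simulated data in the figure. In this two-class setting, ties (possible with an even K) go to the class of the single nearest neighbor, because that label is counted first.

```python
import math
import random
from collections import Counter

def knn_classify(train, x0, k=10):
    """Classify x0 by majority vote among its k nearest training points.

    `train` is a list of ((x1, x2), label) pairs; distance is Euclidean.
    Returns (predicted label, estimated class probabilities)."""
    nearest = sorted(train, key=lambda pt: math.dist(pt[0], x0))[:k]
    counts = Counter(label for _, label in nearest)  # labels counted in order of closeness
    probs = {label: n / k for label, n in counts.items()}
    return max(probs, key=probs.get), probs

# Hypothetical training data: 50 "blue" points around (0, 0), 50 "orange" around (2, 2).
rng = random.Random(9)
train = ([((rng.gauss(0, 0.7), rng.gauss(0, 0.7)), "blue") for _ in range(50)] +
         [((rng.gauss(2, 0.7), rng.gauss(2, 0.7)), "orange") for _ in range(50)])

label, probs = knn_classify(train, (0.2, 0.1), k=10)
print(label, probs)
```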

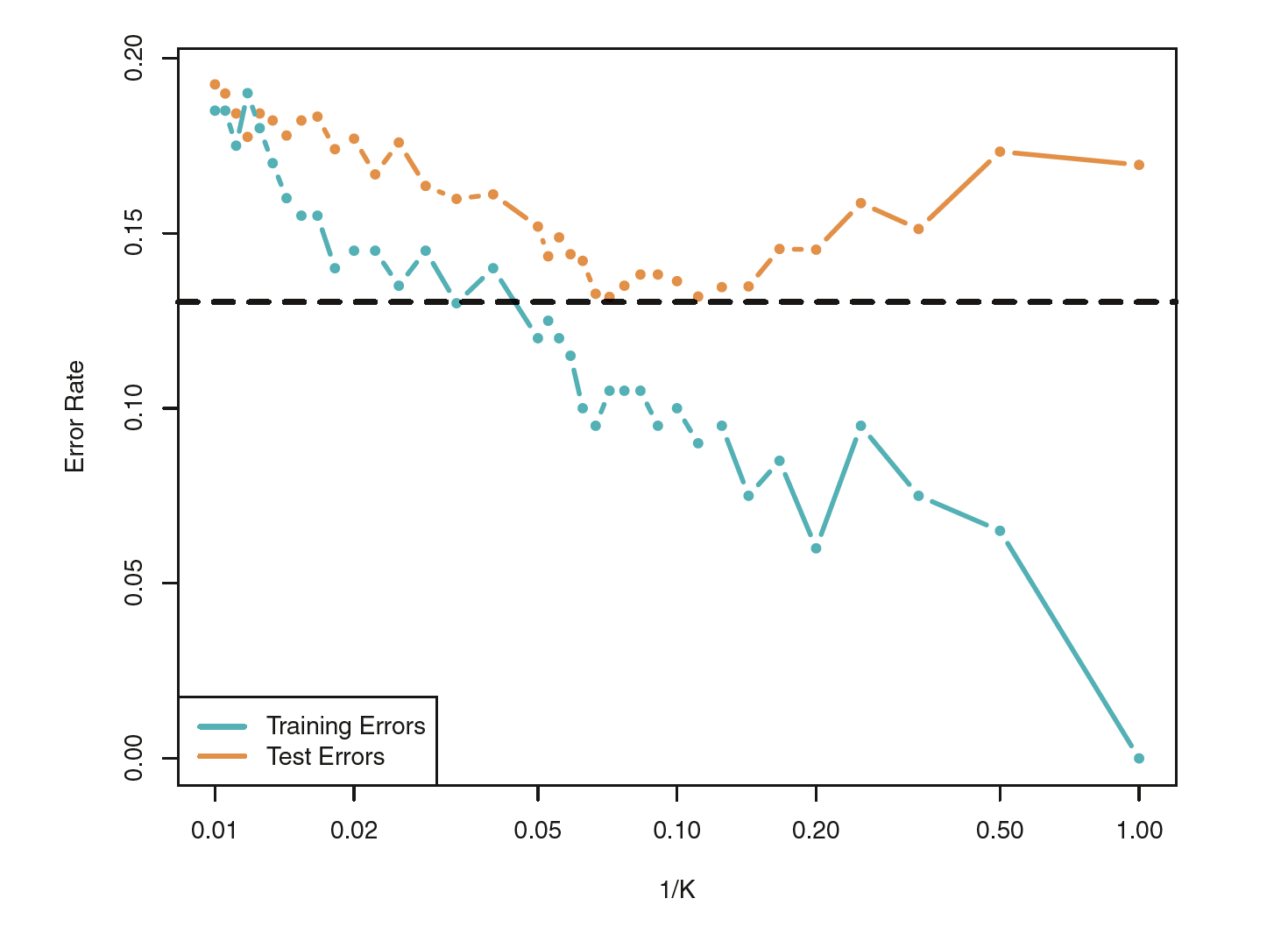

KNN

- Because this dataset has 100 data points, K can range from 1 to 100. At K = 1, the error rate on the TRAINING data will be 0, but the test error rate may be really high. So we are trying to find the happy medium: the test error has that same U-shaped relationship with flexibility, and we want to find the bottom of that U
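A sketch of the train-versus-test pattern as K varies, assuming hypothetical two-class Gaussian data (class centers, spread, and sample sizes are arbitrary choices). With K = 1 every training point is its own nearest neighbor, so the training error is exactly 0; with K = 100 every prediction is the overall majority class of the 100 training points. The test error is the one to minimize, and it is typically smallest somewhere in between.

```python
import math
import random
from collections import Counter

def knn_predict(train, x0, k):
    """Majority class among the k training points nearest to x0 (Euclidean distance)."""
    nearest = sorted(train, key=lambda pt: math.dist(pt[0], x0))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def error_rate(data, train, k):
    """Misclassification rate on `data` of the KNN classifier built from `train`."""
    return sum(label != knn_predict(train, x, k) for x, label in data) / len(data)

def simulate(n, rng):
    """Hypothetical two-class data: 'blue' centered at (0, 0), 'orange' at (1.5, 1.5)."""
    data = []
    for _ in range(n):
        label = rng.choice(["blue", "orange"])
        center = 0.0 if label == "blue" else 1.5
        data.append(((rng.gauss(center, 1), rng.gauss(center, 1)), label))
    return data

rng = random.Random(8)
train, test = simulate(100, rng), simulate(1000, rng)
for k in (1, 5, 15, 50, 100):
    print(f"K = {k:3d}: train error = {error_rate(train, train, k):.2f}, "
          f"test error = {error_rate(test, train, k):.2f}")
```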

Trade-offs
